5,127 Layers

The Gap Between Capability and Adoption

Weekly writing about how technology and people intersect. By day, I’m building Daybreak to partner with early-stage founders. By night, I’m writing Digital Native about market trends and startup opportunities.

If you haven’t subscribed, join 70,000+ weekly readers by subscribing here:


When I think about the future of tech, I sometimes think about Coruscant in Star Wars.

Casual fans know Coruscant as the city planet that’s the capital of the Galactic Empire. It houses the Jedi Temple and the Senate chambers of the Republic.

Less casual fans (read: nerds like me) know that Coruscant is actually made up of thousands of layers. As Coruscant grew, the planet expanded outward, building new layers on top of old ones. The planet has 5,127 distinct urban layers, and the total depth of the city, from the outermost skyscrapers down to the original surface, is 22 kilometers. That works out to an average of ~4.3 meters (~14 feet) per layer.

Most of the Star Wars plot takes place on the uppermost layer, which the Coruscant elite call home (they’re the only people privileged with access to the sky), but there’s a vast underworld below. Coruscant’s population is typically cited in the canon as 3 trillion (!).

Why is Coruscant a good metaphor? To me, the planet represents sheer accumulation: a civilization that’s built so much, for so long, that its beginnings are unrecognizable, buried under layers of change. Coruscant embodies compounding complexity and ambition.

I was thinking of Coruscant last week when Elon Musk announced that SpaceX would acquire xAI. From the official press release:

In the long term, space-based AI is obviously the only way to scale. To harness even a millionth of our Sun’s energy would require over a million times more energy than our civilization currently uses!

The only logical solution therefore is to transport these resource-intensive efforts to a location with vast power and space. I mean, space is called “space” for a reason. 😂

Aside from the use of the 😂 emoji in a corporate press release, the most jarring part of those sentences is their sheer ambition. Data centers in space? We are quite literally expanding outward, building new layers on top of old ones, all towards Musk’s grand vision of becoming an interplanetary species. And we’re clearly living in a world of compounding complexity.

We see this in AI’s progress too. Claude Opus 4.6 and GPT-5.3-Codex are insane, and I feel like I need my weekends just to catch up on new AI capabilities.

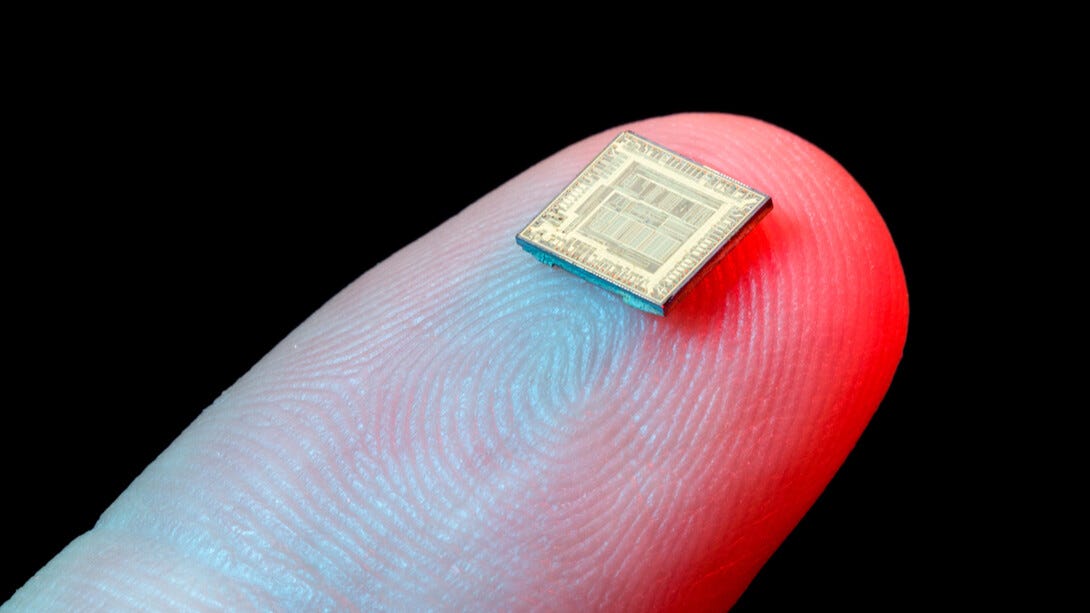

For decades, Moore’s Law ruled tech. It’s a hardware observation: the number of transistors on a chip roughly doubles every two years. The trend has largely held, though it’s slowed as we approach the physical limits of silicon.

AI scaling laws, meanwhile, describe how the performance of neural networks improves as we increase compute, training data, and model size (the number of parameters). And AI’s curve is far steeper than Moore’s: compute and capabilities have been doubling every ~6 months, and even faster lately. A couple of years ago, AI couldn’t get fingers right; now we can’t separate fact from fiction.
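To make the gap between those two curves concrete, here’s a back-of-the-envelope sketch in Python. It assumes both trends hold steadily over a hypothetical 10-year horizon, which real curves never quite do; it’s an illustration of compounding, not a forecast.

```python
# Back-of-the-envelope comparison of two doubling times (illustrative only).
# Assumption: each trend compounds steadily over a hypothetical 10-year horizon.

def growth_multiple(years: float, doubling_time_years: float) -> float:
    """Return the total growth factor after `years` of steady doubling."""
    return 2 ** (years / doubling_time_years)


HORIZON_YEARS = 10  # hypothetical horizon chosen for illustration

moores_law = growth_multiple(HORIZON_YEARS, 2.0)  # doubling every ~2 years
ai_compute = growth_multiple(HORIZON_YEARS, 0.5)  # doubling every ~6 months

print(f"Two-year doubling over {HORIZON_YEARS} years: {moores_law:,.0f}x")
print(f"Six-month doubling over {HORIZON_YEARS} years: {ai_compute:,.0f}x")
```

Over the same decade, a two-year doubling time compounds to 32x, while a six-month doubling time compounds to roughly a million-fold (2^20).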

But even with AI’s rapid progress, I think we’ve been getting ahead of ourselves. Will Manidis wrote a good piece on X last week called End Game Play. His main contention was that all the bold declarations in tech, particularly those from Musk, seem to allude to a preordained end state. Sure, we’ll eventually have data centers in space and civilizations on Mars, but how do we get there? What about the messy middle? The middle state is often (very) long.

This is how I’ve been feeling. All this change, all at once, is thrilling. Back in 2011, Peter Thiel famously said, “We wanted flying cars, instead we got 140 characters.” Now, in a terrific twist of irony, Twitter (the company referenced in that statement) lives within a company building data centers in space. How poetic is that? Peter Thiel is getting his wish, and it really does feel like we’re living inside a sci-fi novel.

But I think we’ve been conflating “AI can now do X” with “AI will now do X,” which are very different things on very different timescales. There’s a yawning gap between capability and adoption.

The Gap Between Capability and Adoption

If we lived in a perfect world, maybe capability and adoption would progress in tandem. But we live in a very imperfect world, one with regulatory drag and organizational bloat and people saying “But that’s the way we’ve always done things.” Lately it feels like we’ve been trying to leap from the Coruscant surface to the uppermost layer, without building the 5,127 layers in between.

I think it will take a long time for AI to truly upend everyday life. The essay du jour this week is Matt Shumer’s Something Big Is Happening. I liked the piece; he’s right that big things are happening! But I also think Shumer is a bit alarmist. Societal change lags technological change by a lot.

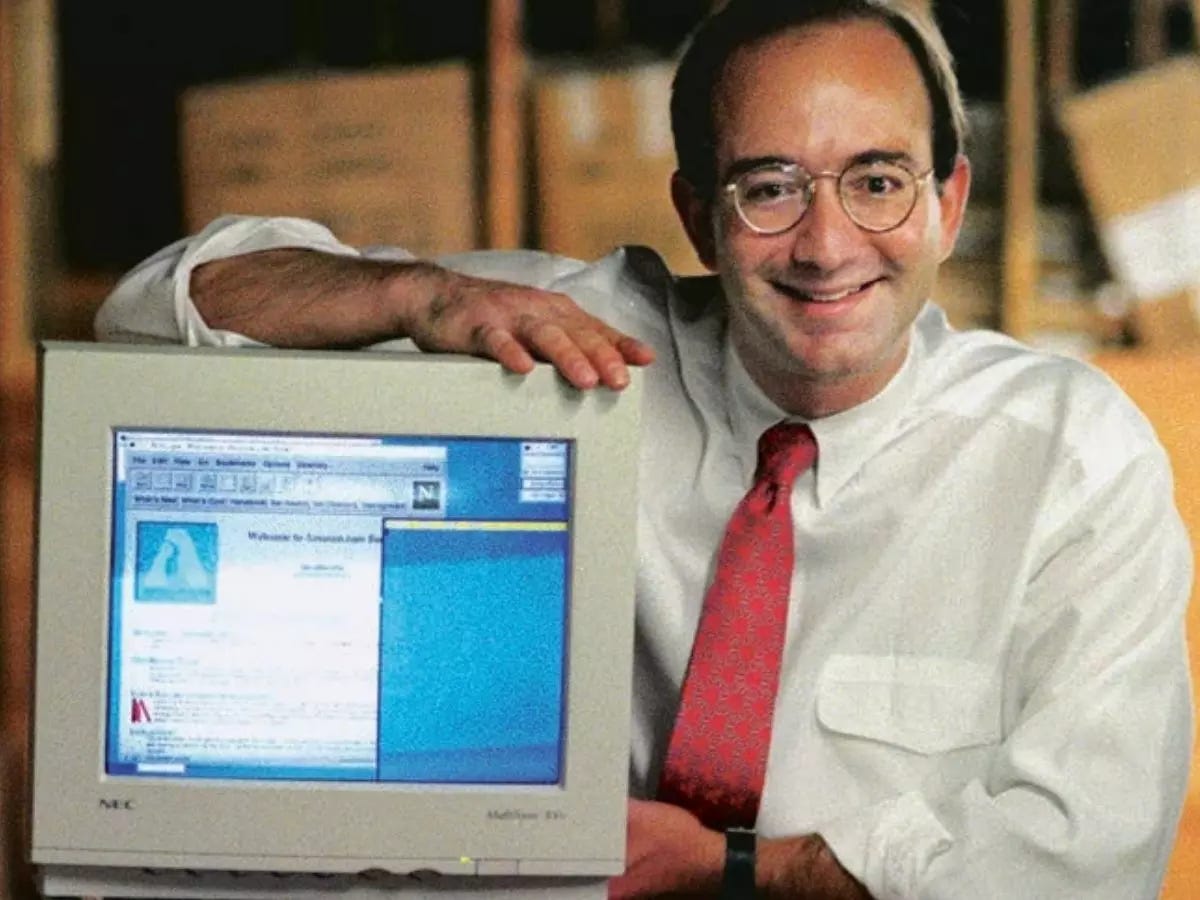

In the fall, we wrote It’s Still 1995 to make the point that we’re still (very) early in the AI super-cycle. The metaphorical Jeff Bezos, that piece argued, still looks like this.

In that piece, we pointed to examples of tech adoption taking a long time:

A quarter-century after Amazon’s IPO, only 20% of our shopping happens online.

Only 60% of corporate data is stored in the cloud.

We’ve been talking about cord-cutting for years, yet 50% of households still have cable.

Only 6% of eligible transactions use Apple Pay, while mobile payments have a 15-20% share overall; in 15% of transactions, we still use cash!

EVs have been the rage for years but comprise only 10% of car sales.

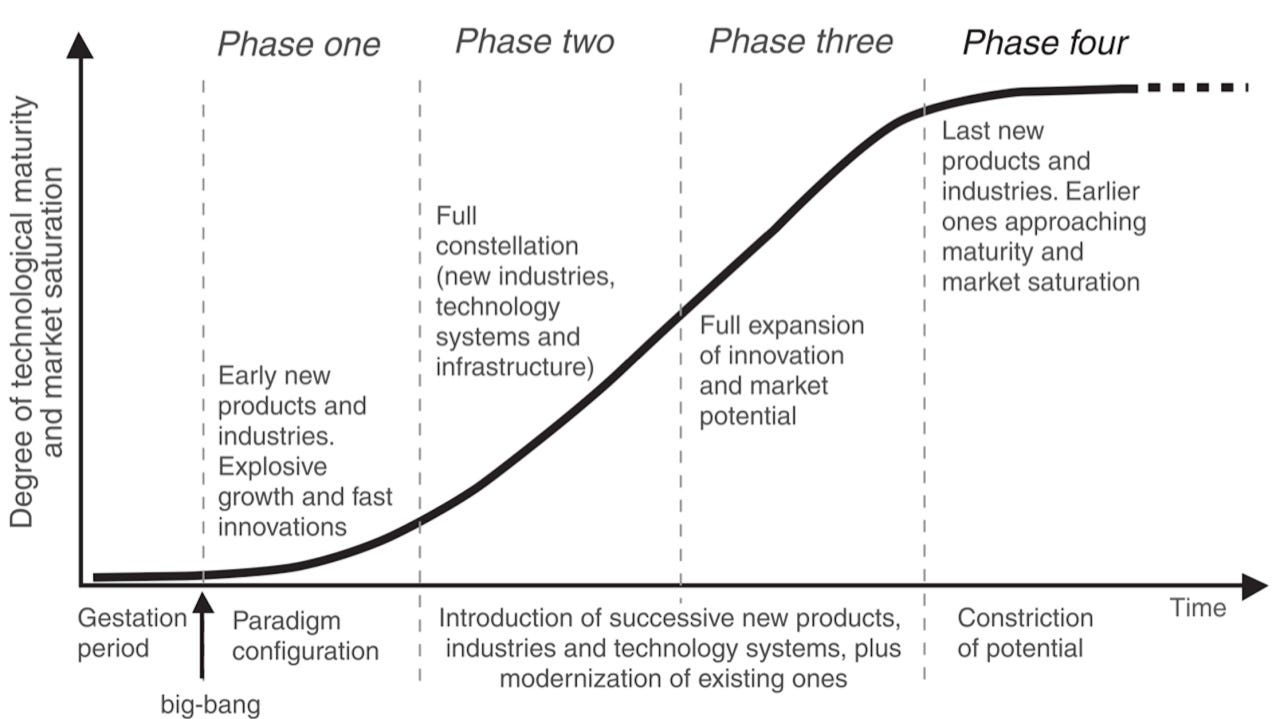

And so on and so on. Change takes time. If you study Carlota Perez’s theory of ~50-year technology cycles, we’re still in “Phase One” of AI, which should last about a decade. If you consider the Big Bang event to be the launch of ChatGPT (November 2022), we’re only ~3 years in.

Lately, we’ve been acting like we’re in Phase Three. I think this is partly because there’s such a gaping crevasse between tech-savvy early adopters (most of the people reading this essay) and everyone else; only 1 in 3 Americans has used ChatGPT! Scaling laws describe how fast capabilities accelerate, but adoption has no such law; it’s governed by human and institutional friction.

Because things have been changing so fast, there’s been a lot of hand-wringing. The last few weeks have brought declarations that software is dead, that the labor markets will go into turmoil, that life will soon be unrecognizable. I disagree.

Here are three of my views on how things play out from here:

1) AI will eat labor, but not all at once. In the near term, augmentation trumps automation.

US SaaS revenue is around $300B. The US labor market is $13T, or 43x bigger. This is the market that everyone expects AI to devour.

This will happen, and it’s already started. The past few weeks have been the Big Bang for code generation specifically, and the days of humans writing code may be over.

But this doesn’t mean we won’t need engineers. On the contrary, I expect the demand for engineers to go up. In last month’s The Year of Invisible AI we talked about Jevons Paradox, which holds that as a resource becomes cheaper and more efficient to use, total demand for it rises rather than falls. More and more companies will need engineers to orchestrate agents, and those engineers will be able to accomplish in days (hours?) what used to take months. Here’s the Moltbot founder’s setup, with more terminals open than I can count.
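Whether Jevons Paradox kicks in comes down to how price-sensitive demand is. Here’s a toy Python model of that logic. The constant-elasticity demand curve, the 10x productivity boost, and the elasticity values are all assumptions chosen for illustration, not estimates of the real market.

```python
# Toy model of Jevons Paradox (an illustrative sketch, not a forecast).
# Hypothetical assumptions:
#   - demand for software follows a constant-elasticity curve: Q = scale * p**(-elasticity)
#   - the effective price of software = engineer cost / engineer productivity
#   - engineer-hours needed = software demanded / productivity
# `hourly_cost` and `scale` cancel out when comparing before vs. after.

def engineer_hours_needed(productivity: float, elasticity: float,
                          hourly_cost: float = 100.0, scale: float = 1_000.0) -> float:
    """Engineer-hours demanded when each hour produces `productivity` units of software."""
    price_per_unit = hourly_cost / productivity          # software gets cheaper as productivity rises
    software_demanded = scale * price_per_unit ** (-elasticity)
    return software_demanded / productivity              # hours needed to build that much software


for elasticity in (0.5, 1.0, 1.5):
    before = engineer_hours_needed(productivity=1.0, elasticity=elasticity)
    after = engineer_hours_needed(productivity=10.0, elasticity=elasticity)  # hypothetical 10x boost
    print(f"elasticity={elasticity}: demand for engineers changes by {after / before:.2f}x")
```

In this model, a 10x productivity gain cuts demand for engineers when elasticity is below 1 (about 0.32x at 0.5), leaves it flat at exactly 1, and raises it above 1 (about 3.16x at 1.5). Expecting engineering demand to grow is, in effect, a bet that demand for software is elastic.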

Software development is the 3rd-largest category of labor spend in the US, after legal (2nd) and healthcare admin (1st). We’re going to see all these categories reinvented.

In the near term, this will mean more augmentation than automation. Hiring will slow, but I don’t think we’ll see mass layoffs. We’ll see enormous productivity gains per worker, though even that will take time: most people will have to be nudged toward AI tools (and trained on how to use them).

This is why “forward deployed” roles are so in vogue right now. Companies need people to help with implementation because implementation is, well, the gap between capability and adoption.

2) Software isn’t dead, but it’s changing. You still won’t get fired for buying IBM, but disruption is coming.

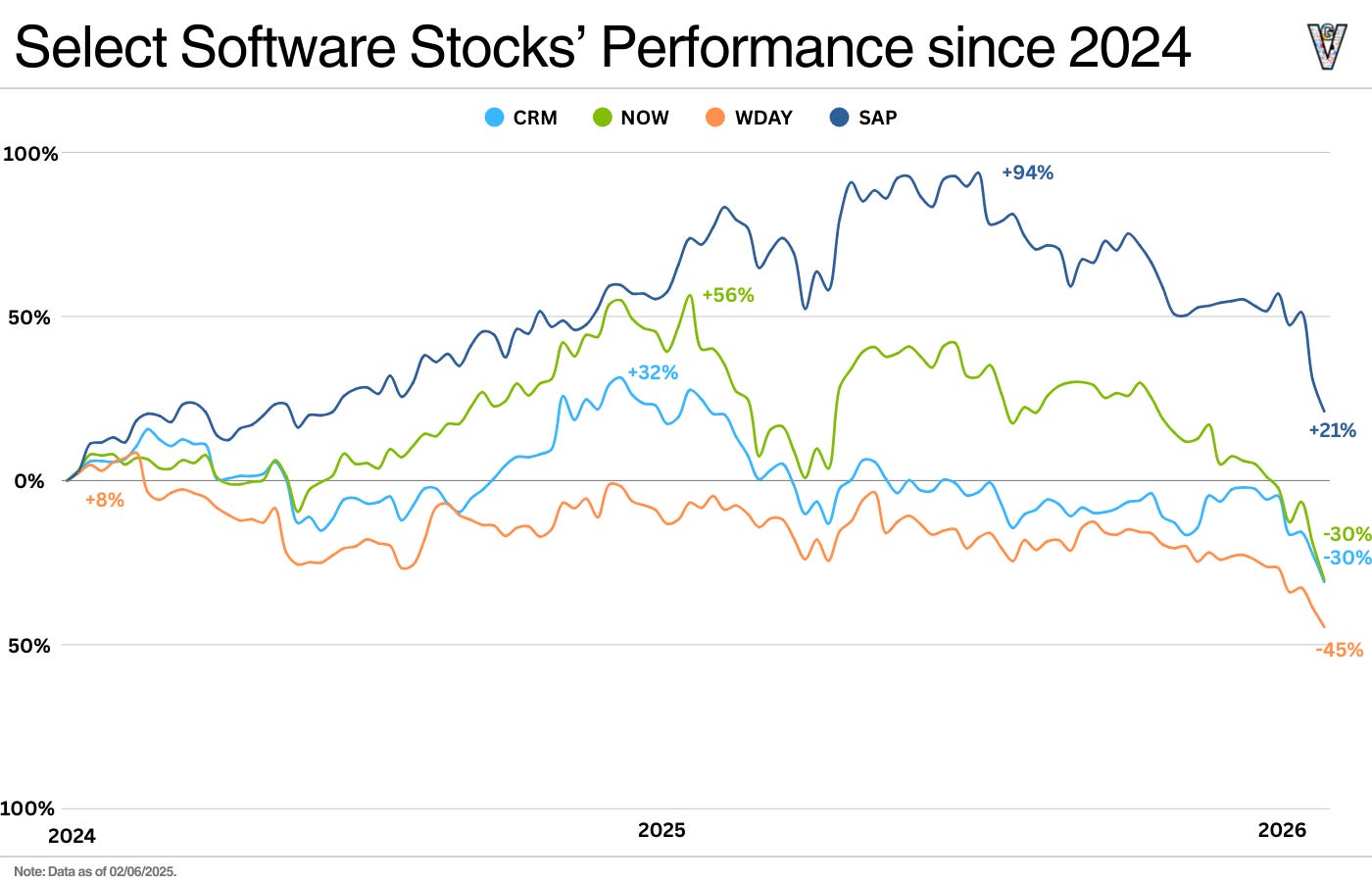

The last couple weeks have been rough for SaaS:

But software won’t die. It’ll evolve into a new normal. Software stocks have traded at premium multiples because they enjoy ~90% gross margins. In my mind, the new normal is ~70% margins, with inference costs inflating COGS (cost of goods sold). The market is waking up to this and pricing it in.
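To see why that margin shift matters so much for valuations, here’s the arithmetic per $100 of revenue, sketched in Python. The 90% and 70% figures come from the paragraph above; the $100 revenue base is a hypothetical round number.

```python
# Illustrative arithmetic: what moving from ~90% to ~70% gross margin implies.
# The $100 revenue base is a hypothetical round number.

def gross_margin(revenue: float, cogs: float) -> float:
    """Gross margin = (revenue - cost of goods sold) / revenue."""
    return (revenue - cogs) / revenue


REVENUE = 100.0            # hypothetical revenue base
classic_saas_cogs = 10.0   # ~90% gross margin
ai_native_cogs = 30.0      # ~70% gross margin, with inference costs now in COGS

old_profit = REVENUE - classic_saas_cogs    # $90 of gross profit
new_profit = REVENUE - ai_native_cogs       # $70 of gross profit
profit_drop = 1 - new_profit / old_profit   # share of gross profit lost

print(f"Classic SaaS margin: {gross_margin(REVENUE, classic_saas_cogs):.0%}")
print(f"AI-native margin:    {gross_margin(REVENUE, ai_native_cogs):.0%}")
print(f"COGS multiple:       {ai_native_cogs / classic_saas_cogs:.1f}x")
print(f"Gross profit lost:   {profit_drop:.0%}")
```

A 20-point margin drop sounds modest, but it means cost of revenue triples (from $10 to $30 per $100) and gross profit per dollar of revenue falls by roughly 22%.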

That said, I don’t think these software companies are going anywhere soon. The old saying goes, “You don’t get fired for buying IBM.” Anyone who has ever talked to a procurement team knows that the software stack ain’t changing any time soon. But every software company should certainly be disrupting itself, and most won’t, because of the Innovator’s Dilemma: disruption would upset current customers, of course! So slowly, over many years, AI-native competitors will eat up share.

Here’s a slick demo video from the Day.ai website. Day is an AI-native CRM, and it’s sure as hell a lot more beautiful and AI-forward than any incumbent CRM. But it’s going to take a long time for the old guard to die.

Jared, our new Daybreak partner, was saying to me this week: everyone basically has to be a vertical Rippling now. What he means is that the old vertical SaaS playbook (find the killer feature, land with that feature, then expand from there) is dead. In a world of AI, you basically need to solve every one of your customer’s problems from the start. Software isn’t dead; it’s different. It’s lower-margin, it’s AI-native, and familiar playbooks are being reinvented in real time.

3) Interface is the true battleground.

About three years ago, I wrote a piece called AI Is an Interface Revolution. The core argument: AI chatbots will become the primary interface through which users interact with businesses and services, and chatbots strip away brand visibility, devalue specialized UIs, and commoditize the underlying providers.

That piece focused primarily on consumer examples like booking a trip through ChatGPT vs. Airbnb. But we’ve actually seen the interface battle waged even more in enterprise. This is why software stocks are down: if you’re just a pretty B2B interface, you’re screwed; Anthropic, OpenAI, et al. will eat your lunch. You’d better have proprietary data to survive.

The companies that will win combine interface + data. The things that make a CRM valuable aren’t drop-down menus, but rather treasure troves of customer interactions and relationship context. While AI replaces UIs, proprietary datasets offer formidable moats: this is one reason companies like Veeva in life sciences or Toast in restaurants should be more durable than horizontal tools.

The irony, as we called out in that 2023 piece with the Airbnb brand example, is that tech companies have competed for years on UX. Now the winning interface might be no interface (aka just a chat window or an agent), and your hard-earned design becomes moot. This is an unlock for companies sitting on rich datasets, and it’s an existential threat to companies whose value was primarily in the interface.

Final Thoughts: Inequality & Underworlds

We’re probably on level two or three of Coruscant, and each of the items above is a level we need to cross: we need to figure out how AI will supercharge labor; we need to figure out what happens with legacy software; we need to figure out how to compete on interface and data. We have a long way to go until we reach the upper levels of a society unrecognizable from today’s.

One other (sinister) way the Coruscant metaphor is relevant: the layering of society. Here’s what one Reddit user has to say about Coruscant’s lower levels:

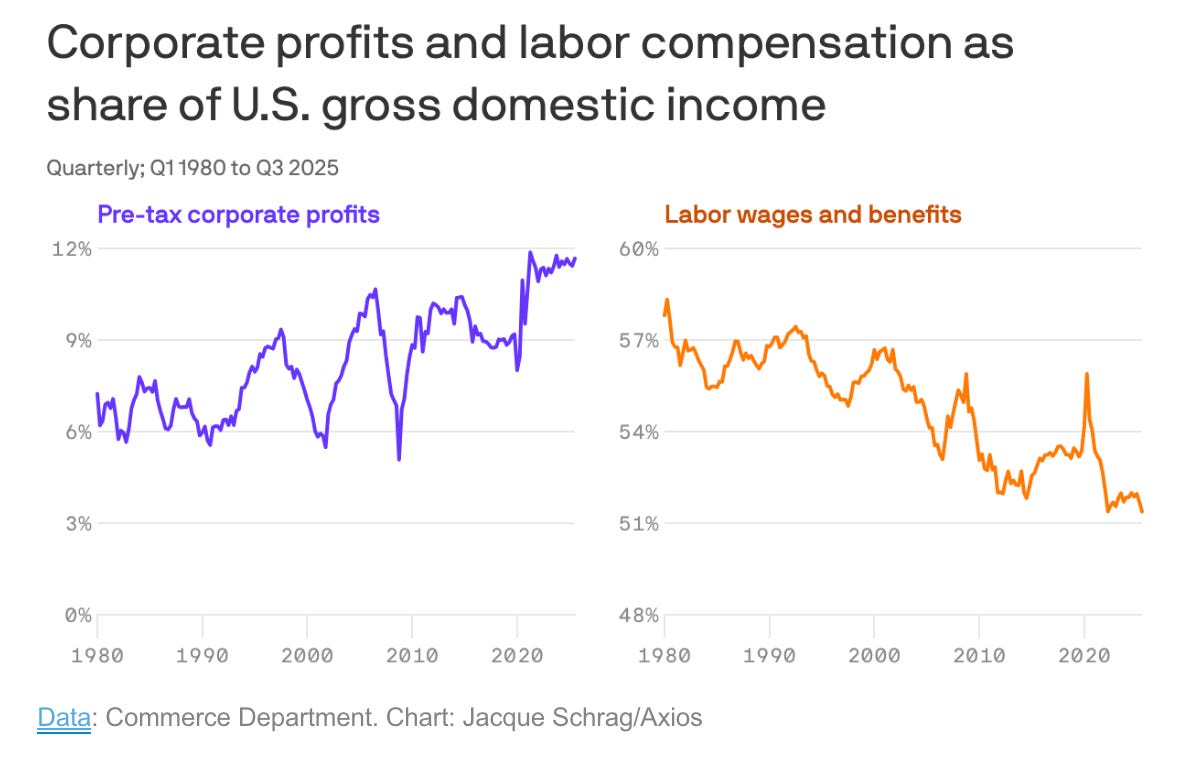

I worry a lot about how AI drives a wedge between the classes. Not because I think there will be mass layoffs, as Anthropic’s Dario Amodei keeps predicting, but because the capital markets will boom while the salaried and hourly workers struggle. This chart should alarm anyone:

We can see the effects already in how much the public hates AI. TikTok erupted this week over the Super Bowl commercials, calling them dystopian: ads for AI companies, GLP-1s, and sports betting. The vitriol toward AI is more pronounced than any anti-tech sentiment I’ve seen in my lifetime.

I’m not sure Silicon Valley is really grasping the extent of the AI backlash. People are being left behind, and that’s the biggest barrier between capability and adoption.
