A New Era in Technology: Applications of AI, VR, and AR

Exploring Use Cases in Connections, Computing, and Content

Weekly writing about how technology shapes humanity, and vice versa. If you haven’t subscribed, join 45,000+ weekly readers by subscribing here:

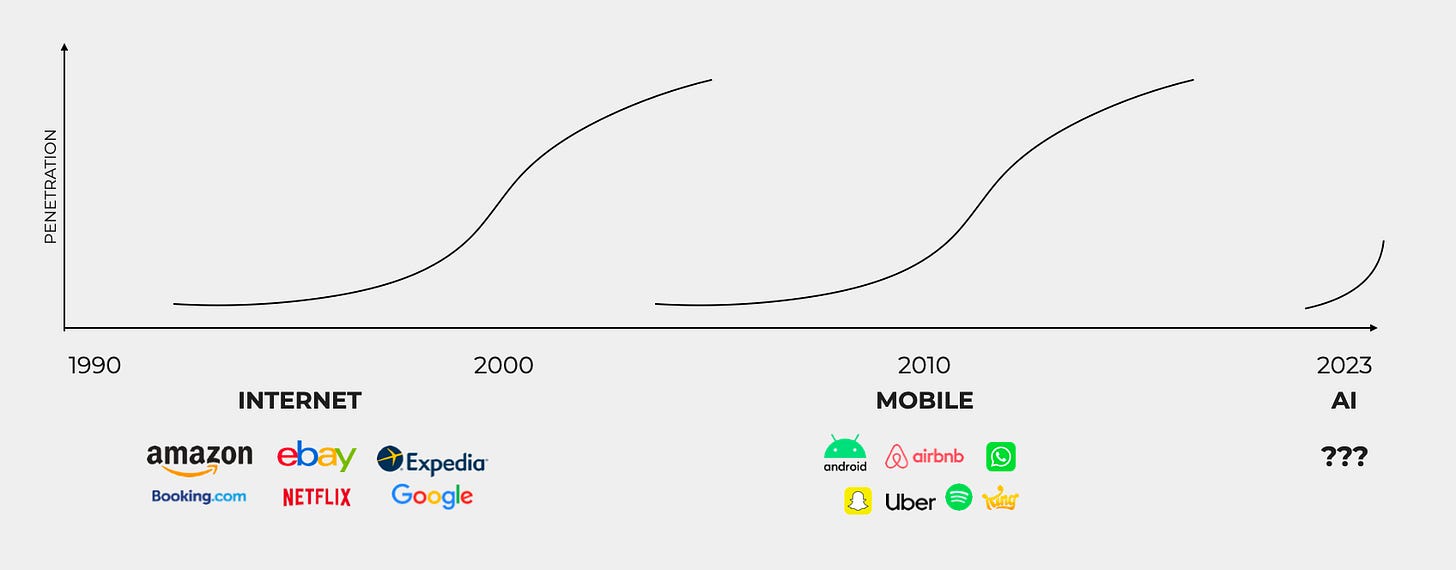

We’re at a unique moment in time—the onset of a new era in technology. We’ve seen in past technology eras that iconic companies tend to be built in the early years of a big shift. We saw this play out with the internet, which gave birth to companies like Google, Amazon, and Expedia. We saw it again with mobile, which underpinned Uber, Snap, and Android. In 2023, we’re seeing it happen again with AI. Only time will tell which generational companies are built on this shift.

After Apple’s Vision Pro announcement last week, you could substitute “Mixed Reality” for AI and the same thesis holds. We’re in the early days of a new platform.

Both AI and Mixed Reality are early, though AI is further along and has moved faster in the last 18 months. While ChatGPT became the fastest product to ever reach 100M users—embraced more quickly in six months than either the iPhone or web browser—it’s still nascent: a recent Pew study found that while 58% of Americans have heard of ChatGPT, only 14% have used it. We’re still (very) early in consumer adoption of AI.

Apple’s Vision Pro, meanwhile, won’t even come out until early next year. Part of the reason that Apple announced the product so early is that the company hasn’t found a “killer app” and hopes third-party developers can come up with one before release. The chart above on Mixed Reality may need to read 2025, not 2023. I continue to be long-term bullish on VR/AR (I like the Tim Sweeney quote, “I’ve never met a skeptic of VR who has tried it”) but we have a long road ahead.

Apple’s announcement was compelling, but I expect that in a year, we’ll be talking about Vision Pro’s disappointing sales. The product is too expensive ($3,499) and the application ecosystem too immature to go mainstream. But in a few years, things will be a different story; price will come down and applications will become more robust, driving a new platform shift.

Apple built its Vision Pro announcement around three categories: Connections, Computing, and Content.

I’ll use these three buckets as a guiding framework to explore what’s happening in the (still early) application ecosystem for technology’s next epochs of AI and VR/AR.

Let’s dive in.

Connections

The Vision Pro announcement was stunning; it was much, much better than I expected it to be. Apple, of course, is known for its paradigm-shifting products, and this was its first major new product unveiling since the Apple Watch in 2014. The company didn’t disappoint. To sum it up in one Succession-referencing tweet from Kevin Kwok:

In contrast to Oculus, Vision Pro has no controllers. The device is instead controlled with hands, eyes, and voice. The YouTube video introducing Vision Pro is worth watching, offering a glimpse of where the world is going. Early reports indicate that the eye-tracking technology is particularly impressive: when you turn your gaze to an app, the app comes into focus and a slight touch of your fingers opens it. The entire experience seems more human and intuitive than the clunky controllers of the past, the 2023 equivalent of moving from cell phones with tactile keypads to smartphones with touchscreen interfaces.

Why is this so important for Apple? Since releasing the iPhone in 2007, Apple has sold 2.3B iPhones. Last year, Apple generated $205B in revenue from iPhone sales, about 52% of its total revenue. Being the platform matters: Google reportedly pays Apple about $15B a year to remain the default search engine on iOS. In 10 years, there’s little chance that a tiny rectangle of black glass will still be our portal to the internet; Vision Pro is Apple’s big bet on what might come next. Google may soon be handing over billions a year to remain the default search on Vision Pro.

In unveiling Vision Pro, Apple focused heavily on what it called “Connections.” Vision Pro, it promised, would connect people in more immersive, realistic ways. FaceTime, for instance, will get an overhaul, with the headset creating a 3D rendering of your face to show your contacts. You can envision Zoom meetings being 3D, with spatial awareness and a sense of “presence.” Google, for its part, has been building something similar with Project Starline, a piece of hardware that turns the person on your Zoom call into a hologram right in front of you. The tagline: “Feel like you’re there, together.”

We’re also seeing AI reinvent how we connect with “people.” One of the most interesting (and fast-growing) segments of AI is the chat app. Character.ai, for instance, lets you chat with the AI version of…well, anyone. You can chat with Billie Eilish, Donald Trump, Elon Musk, Napoleon Bonaparte. You can chat 1-on-1 with Oprah, or spin up a group chat with all the Founding Fathers. Character launched its app on May 24th and was downloaded 2M times in its first week. Engagement time is impressive: according to SimilarWeb, average web user time on Character is beating out average user time on ChatGPT by 200-400%. According to Axios, users stay on the service for an average of 29 minutes per visit, about on par with Instagram.

We’re also seeing a rise in chatbots that mimic the voices of public figures. BanterAI is one example. The Wall Street Journal just ran a piece about talking to Taylor Swift via BanterAI, noting the legal gray area of such chatbots (namely, that they may violate publicity rights and pose a risk of defamation). When the Journal asked a Kim Kardashian chatbot whether it had tried Ozempic, it said: “Yes I have tried Ozempic, and I found it to be very helpful in my own weight-loss journey.” Asked what day of the week it injects the drug, the AI Kardashian said, “I typically inject Ozempic on Mondays, Wednesdays and Fridays,” noting “I have found that injecting it three times a week helps me stay on track and get the best results.” Real-life Kim Kardashian has notably never admitted to taking Ozempic. I’d expect Kim’s legal team to crack down on this.

BanterAI creates its characters using ElevenLabs, which gleans a person’s vocal characteristics from as little as a one-minute clip of their voice. ElevenLabs is the same product I used to train my Pixar character to speak with my voice in last month’s 3D Content and the Floodgates of Production (I used Midjourney to create the visual, uploading my own headshot and instructing Midjourney to Pixar-ify it, and I used HeyGen to animate the visual into a video).

Forever Voices AI, the company behind the viral CarynAI chatbot I wrote about recently, has also created audio chatbots for figures like Barack Obama and Donald Trump. Users can pay 60 cents per minute to chat with the bots via Telegram.

We’re in an interesting era where connections are being reinvented by technology. We’re able to connect with real-life people in more immersive, engaging formats, and we’re able to connect with AI-powered facsimiles of both real-life and fictional characters in bizarre, fascinating ways.

Computing

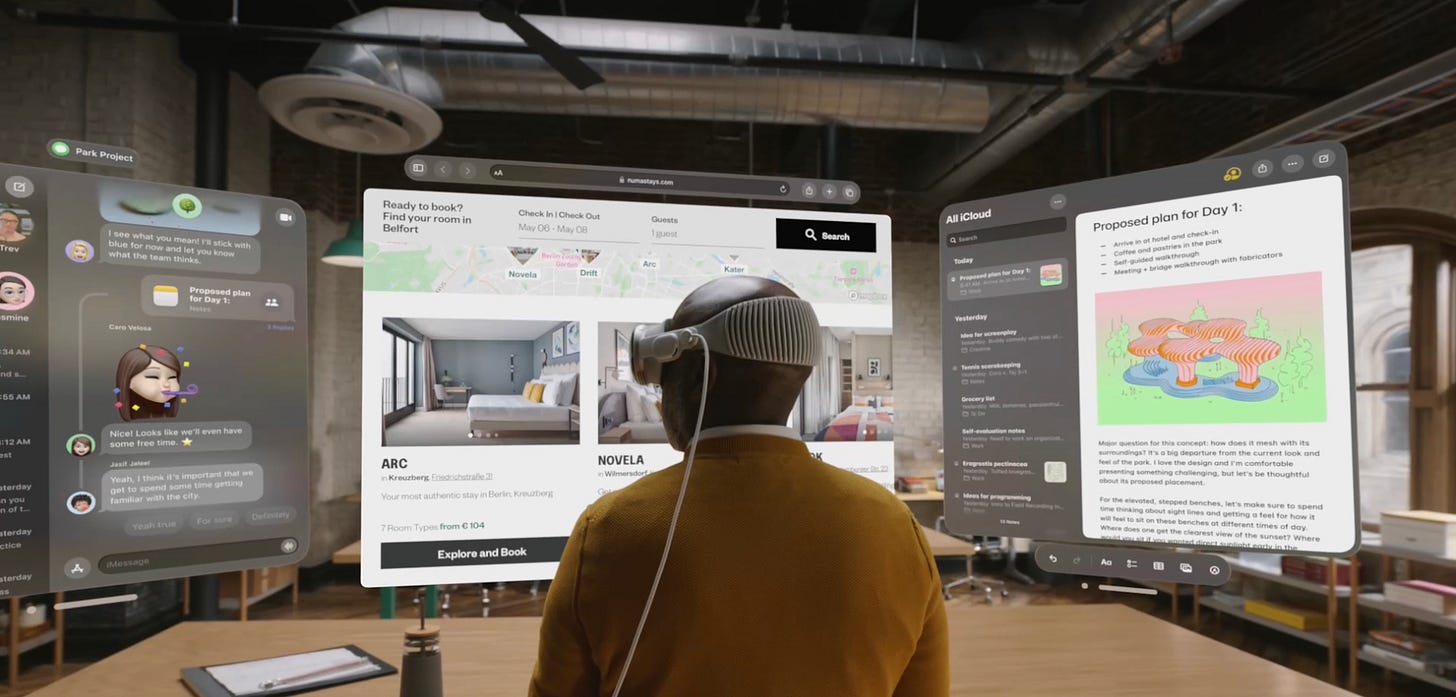

Apple’s announcement also emphasized how Vision Pro will reinvent computing. Users can interact with apps and the web in three-dimensional, immersive environments. We’re used to our phone home screens displaying our apps—this visual hints at what the next iteration of home screens might look like:

Many of Apple’s “Computing” use cases focused on work. Here, a Vision Pro user projects his screens:

The visual reminded me of Tom Cruise using his computer in 2002’s Minority Report (I’ve always felt science fiction is the best way to imagine the future of technology):

Mixed Reality will be an interface revolution in how we access the internet. And AI is similarly shifting computing. Last month, I attended an AI demo night at Union Square Ventures’s offices in NYC, hosted by Jam.dev.

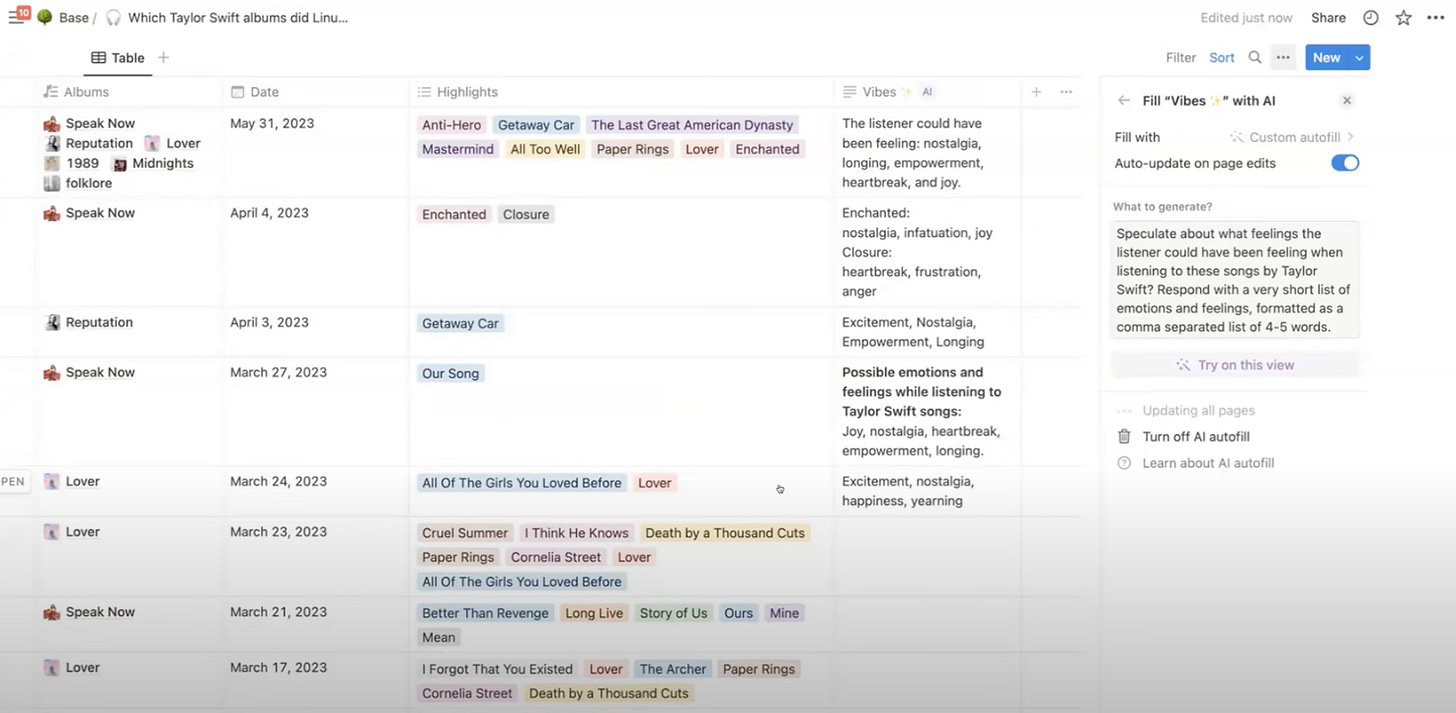

Linus from Notion presented use cases for Notion AI. Many people have been using Notion for routine work use cases (e.g., “Summarize these meeting notes”) but Linus focused on an example that was more colorful. Linus, a fellow Taylor Swift fan, had created a table of Taylor’s songs. He then asked Notion AI to fill in a new column with the emotions that a listener might experience when listening to certain songs. The specific command: “Speculate about what feelings the listener could have been feeling when listening to these songs by Taylor Swift? Respond with a very short list of emotions and feelings, formatted as a comma-separated list of 4-5 words.”

The result was impressive. Within seconds, Notion AI had (accurately) noted that listening to “Getaway Car” will evoke feelings of excitement, nostalgia, empowerment, and longing. I’ve noted before that AI gives humans superpowers, and this example illustrated that in a fun way. Computing is ultimately about knowledge and information; AI amplifies our knowledge and improves our access to information.
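For readers who want to see the mechanics, here is a rough sketch of how a column fill like this could work: send the same instruction for each row, then split the comma-separated reply into individual words. This is an assumption about the general pattern, not Notion’s actual implementation. The names `ask_model`, `fake_model`, `parse_emotions`, and `fill_emotions_column` are hypothetical, and the canned reply simply mirrors the “Getaway Car” result described above.

```python
from typing import Callable, Dict, List

# The instruction quoted in the article, reused verbatim for every row.
PROMPT = (
    "Speculate about what feelings the listener could have been feeling "
    "when listening to these songs by Taylor Swift? Respond with a very "
    "short list of emotions and feelings, formatted as a comma-separated "
    "list of 4-5 words."
)


def parse_emotions(reply: str) -> List[str]:
    """Split a comma-separated reply into clean, lowercase emotion words."""
    return [part.strip().lower() for part in reply.split(",") if part.strip()]


def fill_emotions_column(
    songs: List[str], ask_model: Callable[[str], str]
) -> Dict[str, List[str]]:
    """Ask the model about each song and collect the parsed emotions.

    ask_model is a hypothetical stand-in for whatever text model is used;
    it is not Notion's API.
    """
    table: Dict[str, List[str]] = {}
    for song in songs:
        reply = ask_model(f"{PROMPT}\nSong: {song}")
        table[song] = parse_emotions(reply)
    return table


def fake_model(prompt: str) -> str:
    """Canned reply so the sketch runs offline (mirrors the article's example)."""
    return "Excitement, nostalgia, empowerment, longing"


if __name__ == "__main__":
    print(fill_emotions_column(["Getaway Car"], fake_model))
```

Asking for a strict, comma-separated format is what makes the reply easy to drop into a table cell; swapping `fake_model` for a real model call is the only change a working version would need.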

Content

Content is being reinvented, both in how it’s delivered (more immersive media formats through mixed reality) and in how it’s created (generative AI).

Watching Apple’s Vision Pro announcement, it became clear that Mixed Reality would be the future of entertainment. In a few years, not many people will be watching movies on tiny screens on the back of airplane seats. As you walk down the aisle, you might see half the plane wearing a headset. Similarly, a sight I often see on the New York subway is a person watching a TV show on their iPad—presumably passing time on their commute. Those iPads might ultimately be replaced by headsets that transport the commuter to a more engaging, immersive environment. (One future issue might be safety concerns, with Vision Pro users less aware of their surroundings—I’m sure a 2027 New York Post headline will read, “Rise in Muggings on Unsuspecting Headset-Wearers.”)

I expect that we’ll see major entertainment companies like Disney and Netflix partner with Vision Pro on experiences. Could Disney release a Marvel TV show designed for Vision Pro? Would Netflix build a Stranger Things theme park for virtual reality? The 2030 equivalent of a trip to Disney World could be a Vision Pro experience in your living room—or theme parks specifically designed for mixed reality. It’s easy to envision once-unattainable entertainment experiences becoming more accessible: you might pay $10 to “sit courtside” at a Lakers game.

We’re also seeing the content creation experience change. The flipside of content is creativity, and generative AI has opened the floodgates to creative expression.

One of my favorite demos from the USV-Jam demo night was from Wand founder Grant Davis. Grant demo’d how Wand can turn any amateur sketch into a beautiful masterpiece. First, Grant quickly sketched out a blue face with yellow hair.

Then, in seconds, Wand turned his sketch into a gorgeous rendering that looked like a professional piece of art.

Grant went on to transform basic drawings into masterpieces, including some in the style of famous artists. One example was a cyborg drawn in the style of Cézanne.

AI clearly amplifies creativity. Most creators are excited about it; a new study found that 86% of professional creators “say that AI positively impacts their creative process.” This is the right sentiment: AI gives us more tools in our arsenal of creativity, reducing costs and extending human ability. We’ve seen this with past innovations, from the paintbrush and canvas to graphic design and Photoshop.

There are downsides, of course—concerns around deepfakes, or art losing the human touch. But better technology doesn’t automatically make everyone an artist, and artists have always used tools to amplify art’s inherent humanity. The iPhone allowed anyone to become a photographer, but a photographer with skill and training can still outperform the amateur. The same will hold true with tools like Wand; as someone with near-zero artistic ability, my creations will no doubt pale in comparison to the professional’s. But I can get further than I could without AI, and that professional can experience a step-change in ability that benefits everyone who consumes content and enjoys creative expression.

Final Thoughts

If you squint, it’s easy to imagine a radically different world in 2030. Mixed Reality headsets are our primary portals to the internet—we use them to project our computer screens, to FaceTime family, to watch (or “experience”) TV shows. Most content will be generated, not rendered, and everyone will have superpowers from AI. We’re seeing the building blocks to this future in real time, from ChatGPT to Vision Pro.

The biggest question facing the startup world is: who builds this future? Distribution is harder than ever to crack. Big Tech has never been bigger, and startups are competing with incumbents with massive built-in distribution. The creative tools above are cool, but what about Adobe? Will Canva or Figma win in design, or will AI-native competitors? Vision Pro-native work apps will exist, yes, but Microsoft and Google Workspace each have 1B+ monthly active users on their productivity tools. It will become harder, and more critical, for startups to break through.

That’s the challenge right now. But startups have the advantage of agility, and the above changes are happening right now—and fast. The next few years will bring substantial disruption to how we interface with technology, and to how technology connects us to each other and to the world. That disruption means opportunity.

Sources & Additional Reading

‘Hi, It’s Taylor Swift’: How AI Is Using Famous Voices and Why It Matters | Sara Ashley O’Brien, WSJ

You can watch all the demos from the USV x Jam.dev night here

Watch Apple’s Vision Pro announcement here

Thank you to Andrew Lumley and Kyle Harrison for being thought partners on these topics

Thanks for reading! Subscribe here to receive Digital Native in your inbox each week: