The AI State of the Union

What's Happening in AI's Budding Application Layer



Hey Everyone 👋,

Over the past two weeks, I wrote a two-part “State of the Union” on AI’s budding application layer. Here, I’m combining Part I and Part II into a single deep-dive.

Things are moving fast. Predictions and market maps from January seem quaint. In March alone, OpenAI launched GPT-4, ChatGPT got plugins, and Bill Gates declared this “The Age of AI,” arguing that the development of AI is as fundamental as the creation of the personal computer, the internet, and the mobile phone.

I’ve structured this piece to look at three themes that excite me:

Personal Assistants for Everyone

Amplifying Human Knowledge

Amplifying Human Creativity

Then, we examine three important questions that stem from the AI revolution:

Will AI take our jobs?

What is reality in a world of generative AI?

Who will capture the value being created?

Let’s dive in.

Introduction: Accelerating Innovation

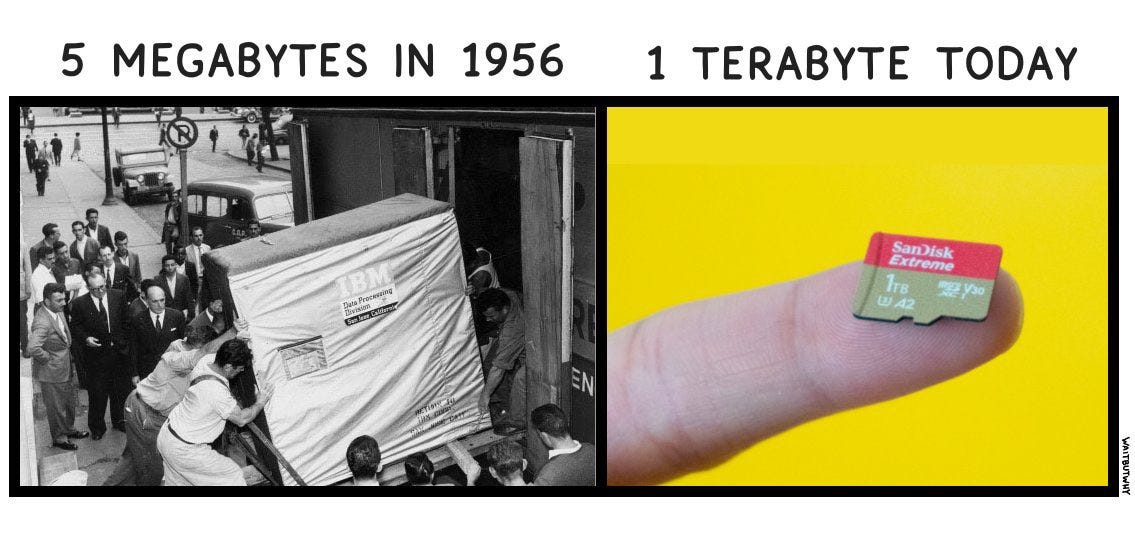

The pace of technological advancement is staggering. I’ve always liked this visualization: 5 megabytes in 1956 vs. 1 terabyte today. For context, 1 terabyte = 1,000,000 megabytes. A terabyte hard drive in 1956 would’ve been the size of a 40-story building; today, it fits on your fingertip.
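For anyone who wants to check the scale of that jump, here is a quick back-of-the-envelope sketch. It assumes decimal units (1 terabyte = 1,000,000 megabytes, as above) and simply counts how many 5-megabyte drives from 1956 it would take to match a single terabyte; the variable names are my own.

```python
# Back-of-the-envelope storage comparison, using decimal units as in the text.
MB_PER_TB = 1_000_000      # 1 terabyte = 1,000,000 megabytes
DRIVE_1956_MB = 5          # capacity of the 1956 drive, in megabytes

# How many 1956-era drives would it take to hold one terabyte?
drives_needed = MB_PER_TB // DRIVE_1956_MB
print(f"{drives_needed:,} of the 1956 drives hold 1 TB")
```

That works out to 200,000 of those drives, which is why the building comparison is not as far-fetched as it sounds.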

Or take another example of technology’s progress: within a single lifetime—66 years—we went from the Wright Brothers’ first flight (1903) to putting a man on the moon (1969), 239,000 miles from Earth.

To connect these two examples: the iPhone in your pocket is exponentially more powerful than the computer NASA used to send astronauts to the moon. NASA’s Apollo 11 computers cost $3.5M apiece and were the size of a car. Today’s iPhone can process 3.36 billion instructions per second and could be used to guide 120,000,000 Apollo-era spacecraft to the moon, all at the same time.

(By the way, the TI-84 calculator you used in AP Calculus is 350 times faster than Apollo computers and has 32x more RAM and 14,500x more ROM.)

The point is: things are moving fast. And the pace of advancement carries over to AI. Ten years ago, AI struggled to tell the difference between a cat and a dog. Today, AI can not only classify, but generate detailed images of cats and dogs from a text prompt. Models are improving rapidly. Here’s a side-by-side of Midjourney’s output to the same prompt—“Barack Obama and Donald Trump playing basketball”—from 12 months ago vs. today:

People are using AI to write code and to design their apartments, to draft 4,000-word essays and to craft poetry. A new peer-reviewed study found that AI can now diagnose diseases better than 72% of general practitioner doctors.

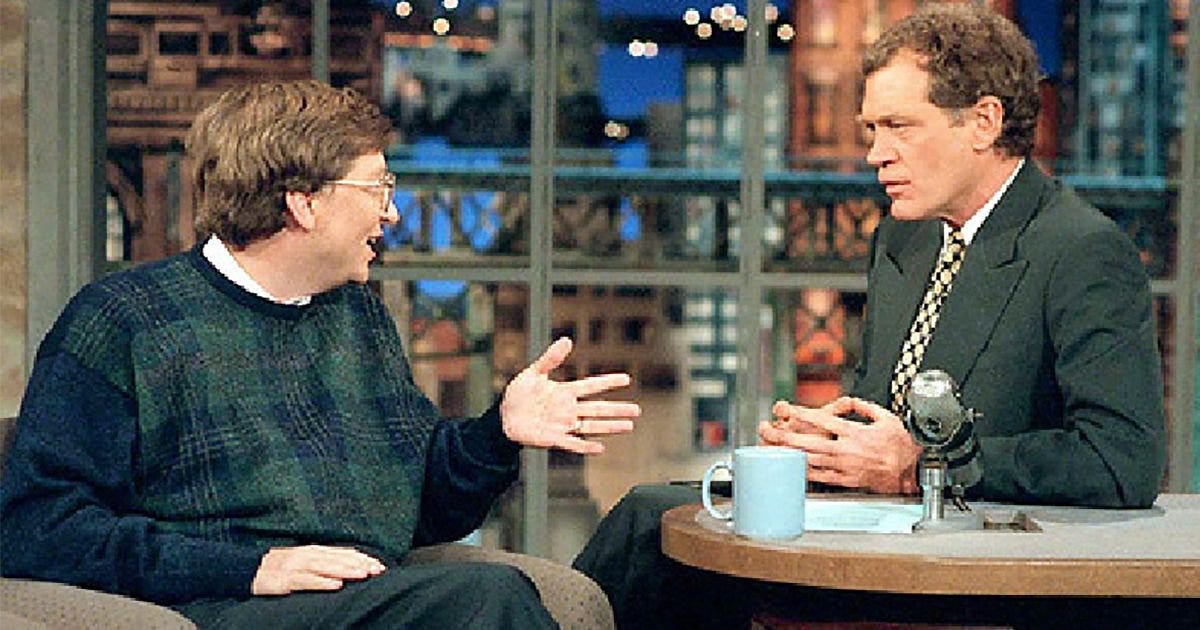

Hearing Bill Gates proclaim a new tech epoch reminded me of another time he professed excitement for a budding technology—the internet. In 1995, Gates went on the Late Show with David Letterman to explain the web. That interview was met with ridicule (you can watch it on YouTube here), with Letterman giving wry responses to Gates’s excitement about streaming a baseball game (“Does radio ring a bell?”) or being able to experience that game online even after it’s over (“Do tape recorders ring a bell?”). He just didn’t get it.

This time is different. People are paying attention, if only because life has changed so dramatically in the last half-century. When my dad was growing up, TV had three channels and he had to look up information in a leather-bound encyclopedia. Since Gates appeared on Letterman, we’ve become accustomed to Google’s Search and Amazon’s Everything Store and Facebook’s social graph. Tech companies have become verbs that dictate our lives—we Instagram special moments, Snap our friends, Uber to work, Airbnb when we travel.

The pace of change has been rapid, but we’re only on the cusp of the digital revolution. Computers used to be really good at one thing: computing. In other words, doing calculations. Now they’re becoming good at learning (hence the term “machine learning”), operating more like the human brain. And computers are capable of moving much, much faster than our brains—an electrical signal in the brain moves at 1/100,000th the speed of the signal in a silicon chip. We’re seeing a Cambrian explosion in the startup world: about 1 in 2 startups in Y Combinator’s Winter 2023 batch is building a product that uses OpenAI’s APIs.

Let’s start with three themes I’m excited about in AI applications:

Personal Assistants for Everyone

Amplifying Human Knowledge

Amplifying Human Creativity

Personal Assistants for Everyone

Back in January, I wrote about the obscure 90s film Hyperland, created by Douglas Adams of Hitchhiker’s Guide to the Galaxy fame. The premise of Hyperland (which you can watch on YouTube here) is that Adams is fed up with passive linear TV—what the film calls “the sort of television that just happens at you, that you just sit in front of like a couch potato.”

Seeking a more interactive form of media, Adams takes his TV to a dump, where he meets Tom (played by Tom Baker). Tom is a “software agent”—essentially, a digital butler capable of personalizing your life and carrying out tasks for you.

Tom reminds me of modern-day AI.

Last week, OpenAI unveiled ChatGPT plugins. Plugins essentially mean that ChatGPT users can now interact with 11 launch partners—including Instacart, Expedia, and OpenTable—all from the ChatGPT interface. Like Tom, ChatGPT plugins act like digital butlers.

How plugins work is best illustrated with an example:

Ben Thompson asked ChatGPT for a recipe that includes pork and cabbage. You can see in this screenshot that at the end of ChatGPT’s response, ChatGPT asks Ben if he’d like a shopping list for the recipe. Ben says yes, and ChatGPT spits out an Instacart shopping cart with all the requisite ingredients.

This, of course, uses Instacart’s plugin. But you have other options too: with plugins, ChatGPT can book you a restaurant (OpenTable), buy you a product (Shopify), or stitch together apps (Zapier). Everyone is getting their own personal assistant.
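To make the "digital butler" idea concrete, here is a toy sketch of an assistant handing a request off to a registered plugin. Everything in it is an assumption for illustration: the registry, the keyword matching, and the handler functions are hypothetical stand-ins, not OpenAI's plugin API or any partner's real interface. In the real product, the model itself decides when to call a plugin.

```python
from typing import Callable, Dict

# Hypothetical registry: plugin name -> handler. The names mirror launch
# partners mentioned above, but the handlers are stand-ins, not real APIs.
PLUGINS: Dict[str, Callable[[str], str]] = {
    "instacart": lambda req: f"[Instacart] cart created for: {req}",
    "opentable": lambda req: f"[OpenTable] table requested for: {req}",
}

# Toy keyword routing (an assumption); a real assistant lets the model choose.
KEYWORDS = {"shopping list": "instacart", "reservation": "opentable"}


def route(request: str) -> str:
    """Send the request to the first plugin whose keyword appears in it."""
    lowered = request.lower()
    for keyword, plugin in KEYWORDS.items():
        if keyword in lowered:
            return PLUGINS[plugin](request)
    return "No plugin matched; answer from the model alone."


print(route("Make a shopping list for pork and cabbage stir-fry"))
```

The point of the sketch is the shape, not the details: one conversational front door, many services behind it.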

Imagine giving this prompt to ChatGPT:

“I’m going to Paris for seven nights. Please book me flights that leave in the morning on March 30th and get me home in time for dinner on April 6th. I’d like to stay by the Louvre, no more than $500 per night, and would like dinner reservations each night within walking distance to the hotel.”

No more laborious, monotonous interaction with Expedia and Google Flights and OpenTable. OTAs like Priceline and Expedia reconfigured travel in the 2000s, rendering travel agents obsolete; AI models could do something similar to today’s industry (though currently the bookings still go through OTAs like Expedia that have partnered with OpenAI).

What’s also new about plugins is that they let ChatGPT connect to the internet. Previously, ChatGPT was limited by the dataset it had been trained on, causing it to omit events past 2021. For a while, for instance, ChatGPT didn’t know that Elon Musk is CEO of Twitter. Now, ChatGPT can access the web’s information in real time and offer up-to-date responses. That’s big.

When I think about AI applications, I think of assistants and I think of amplifiers. The lines between the two are blurry, but this framework helps me think about use cases:

Amplifiers are about augmenting human abilities; they give us superpowers that make us superhuman. More on amplifiers in the next two sections.

Assistants are less about augmenting humans and more about saving us time and effort by completing tasks for us. Assistants are the Toms, the digital butlers. Or to use a more modern reference—and to continue with the superpower analogy—assistants are J.A.R.V.I.S. from Iron Man, the computer companion that serves Tony Stark. We all want to be superheroes, and AI helps us get there, but we also need our trusty digital sidekick to make our lives easier. With AI, we now all get our very own J.A.R.V.I.S. (fun fact: the acronym stands for Just A Rather Very Intelligent System).

We see personal assistants in ChatGPT plugins booking our hotels and ordering us groceries. We also see them in other exciting new startups; Harvey, for instance, acts as a personal assistant for lawyers, helping with contract analysis, due diligence, litigation, and regulatory compliance. It’s like having your own paralegal, at a fraction of the cost. This is an interesting trend, and we’ll see more verticalized assistants pop up. In Part II, we’ll explore what this means for labor market disruption—paralegals, for instance, could be among the jobs at risk.

Another reason plugins are so fascinating: they signal ChatGPT becoming a platform. Soon, thousands of companies might partner with OpenAI. Packy McCormick and Ben Thompson had some good thoughts this week on what this might look like. Many smart people have compared this emergent platform to Apple’s App Store.

Some thoughts on how this might unfold:

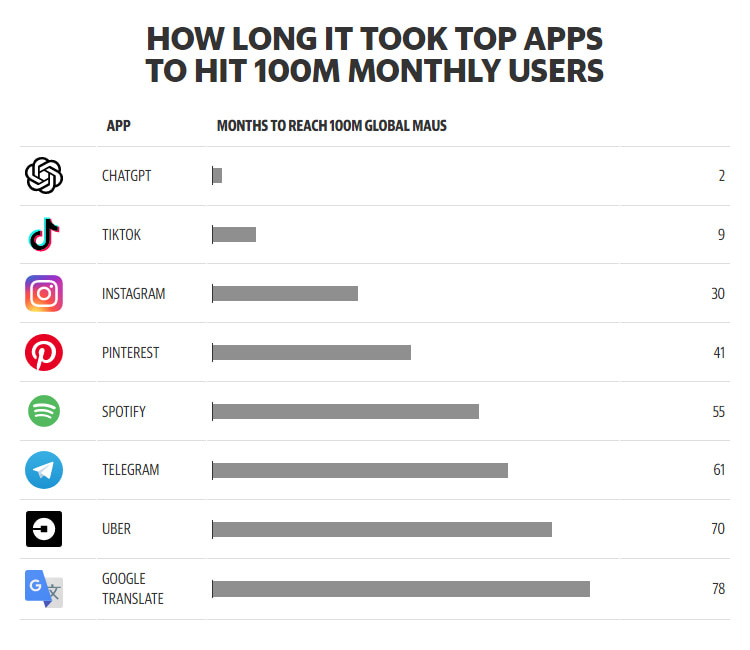

When ChatGPT launched, I expected its growth to be gated by distribution. ChatGPT doesn’t even have an app; users are accessing the tool via the web. But this clearly hasn’t stood in the way of ChatGPT becoming arguably the fastest-growing product in history, taking two months to hit 100M users.

My TikTok feed demonstrates ChatGPT’s mainstream appeal: video after video and comment after comment feature people talking about using ChatGPT to do their homework, to sketch out their lesson plans, to write their emails. It’s a certified phenomenon.

What’s surprised me is that ChatGPT is becoming a new interface, which plugins solidify. I’d thought that existing distribution would win—Snap, for instance, integrating ChatGPT into its My AI feature for 750M monthly active users. But instead, people are interacting with businesses through ChatGPT itself.

It will be fascinating to see how businesses adapt themselves to this new reality. When Google Search became dominant in the 2000s, businesses optimized their websites with keywords for SEO. When Instagram became dominant in the 2010s, businesses optimized their cafes and restaurants and hotels for Instagrammable photos. What happens in the 2020s when every company needs to make sure that it incorporates generative AI? How does Uber vie with Lyft for riders when a user gives ChatGPT the simple command, “Find me a ride to the airport”?

Depending on how plugins take off, ChatGPT may be the interface through which users interact with sites we used to visit directly or with apps we used to download—Expedia.com, OpenTable.com, Instacart’s mobile app. We’re seeing a massive shift in the user experience, and plugins were the shot across the bow—if I were Google with Chrome or Apple with its App Store, I’d be thinking carefully about my next move.

Amplifying Human Knowledge

Ruminating on the rise of AI, Bill Gates explains that there have been two defining technology experiences of his life:

The first came in 1980, when Gates first used a graphical user interface. A GUI is how you and I interact with computers—the pointers, icons, windows, scroll bars, and menus that we’re all accustomed to on our screens. It’s hard to imagine now, but before GUIs you had to type commands at a C:\> prompt to interact with a computer.

The second defining experience for Gates came just last year, when the OpenAI team demonstrated how ChatGPT could ace the AP Bio exam. Gates had challenged the team to the bio exam for a specific reason: “I picked AP Bio because the test is more than a simple regurgitation of scientific facts—it asks you to think critically about biology.” But the AI model aced the test anyway, getting 59 of 60 multiple-choice questions correct and producing top-tier responses to the essay questions. An outside expert scored the test, giving it a 5, the highest possible score.

AI will make us smarter. Technology has made us better at math for decades—calculators, Excel spreadsheets, computer programs. We get computational superpowers. Think of the same analogy, but applied to all human knowledge. We see this in emergent startups like Hebbia (neural search for the enterprise), Rewind (“The Search Engine for Your Life”—essentially a better memory), and Kumo (predictive intelligence) that expand our brainpower and our human abilities. We see this in established productivity tools like Notion and Google Workspace that are integrating generative AI, letting you summarize meeting notes and write thoughtful emails.

ChatGPT’s laurels continue to pile up: ChatGPT passed an MBA exam at Wharton; ChatGPT took the bar and passed; ChatGPT passed medical licensing exams.

And AI model abilities will only improve. One fascinating chart to end on—how GPT-4 compares to GPT-3.5 on exam performance.

In just a short time period, we’ve leapt from 40th-percentile to 88th-percentile on the LSAT and 20th-percentile to 70th-percentile on AP Chemistry.

Amplifying Human Creativity

There are a few laws that govern the universe. One such law: as people approach age 30, their interest in interior design grows exponentially. I’m no exception: my Instagram Explore Feed is littered with home decor content, and my YouTube homepage is almost exclusively Architectural Digest home tours.

Recently, I turned to Midjourney to get design inspiration for our apartment. As someone with precisely zero artistic talent, I was actually fairly successful. Here’s the output for the prompt:

Architectural Digest Style Photo, New York apartment, Contemporary, Primary Bedroom, Fireplace, Dark-Patterned Wallpaper, Animal Print, Leather, Black Rug, Restoration Hardware, Yellow Lighting, Nighttime, Cozy Vibe, Unique --ar 16:9

This is what’s so exciting about generative AI; it can make anyone (even me!) more creative. Artificial intelligence 🤝 artistic intelligence.

When you see the fidelity of generated images, your mind starts to think about the possibilities. In a talk with Sequoia last week, NVIDIA’s CEO Jensen Huang said: “Every single pixel will be generated soon. Not rendered: generated.” It’s interesting to think of this applied to rich media formats like film and gaming. We can see the improvement in Midjourney’s creations already (think back to the Obama v. Trump basketball image earlier), and the steep slope will only continue: soon, we’ll generate rich video and immersive 3D worlds.

An example of Midjourney v5 from Nick St. Pierre reminds me of HBO’s White Lotus. It’s the output for the prompt:

1960s street style photo of a crowd of young women standing on a sailboat, wearing dior dresses made of silk, pearl necklaces, sunset over the ocean, shot on Agfa Vista 200, 4k --ar 16:9

Imagine having an idea for a story, and being able to generate an entire TV show off of your script. One of the broad themes in Digital Native is that the arc of creative tools bends toward more affordable, accessible, and high-quality creation. Generative AI could make it possible for anyone to become a showrunner. This could, of course, have a major impact on jobs; we’ll unpack that in Part II.

We’re already seeing flavors of user-generated generated content (UGGC?). Last week, Runway presented the 10 finalists in its festival for AI-generated short films. You can watch the 10 videos here. The films are impressive and engaging (and you’ll see in the credits that they still employ plenty of people). It’s interesting to think about next-gen content platforms, social networks, and games that incorporate generative AI—your 2023 versions of Minecraft or Roblox or Rec Room.

One final example from St. Pierre of Midjourney v5 being used creatively—product design. Here’s the output for the mock-up of a Nike Air Force 1 and Slytherin collab. The prompt:

Street style photo, Closeup shot, Nike Air Force 1 slytherin collab, unique Colorway, snake skin, hogwarts, natural lighting, original, unique, 4k --ar 16:9

Pretty slick. Nike’s current customization tools are rudimentary, but that’s bound to change; you can imagine generative AI expanding who can be a designer. Just in the past few weeks, both Adobe and Canva launched AI products: Adobe unveiled Firefly, which includes a text-to-image generator, and Canva launched Magic Design, which creates design templates based on your inputs. One open question is who will capture the value being created here: incumbents like Adobe and Canva, or the startups being founded today. We’ll explore that more in Part II.

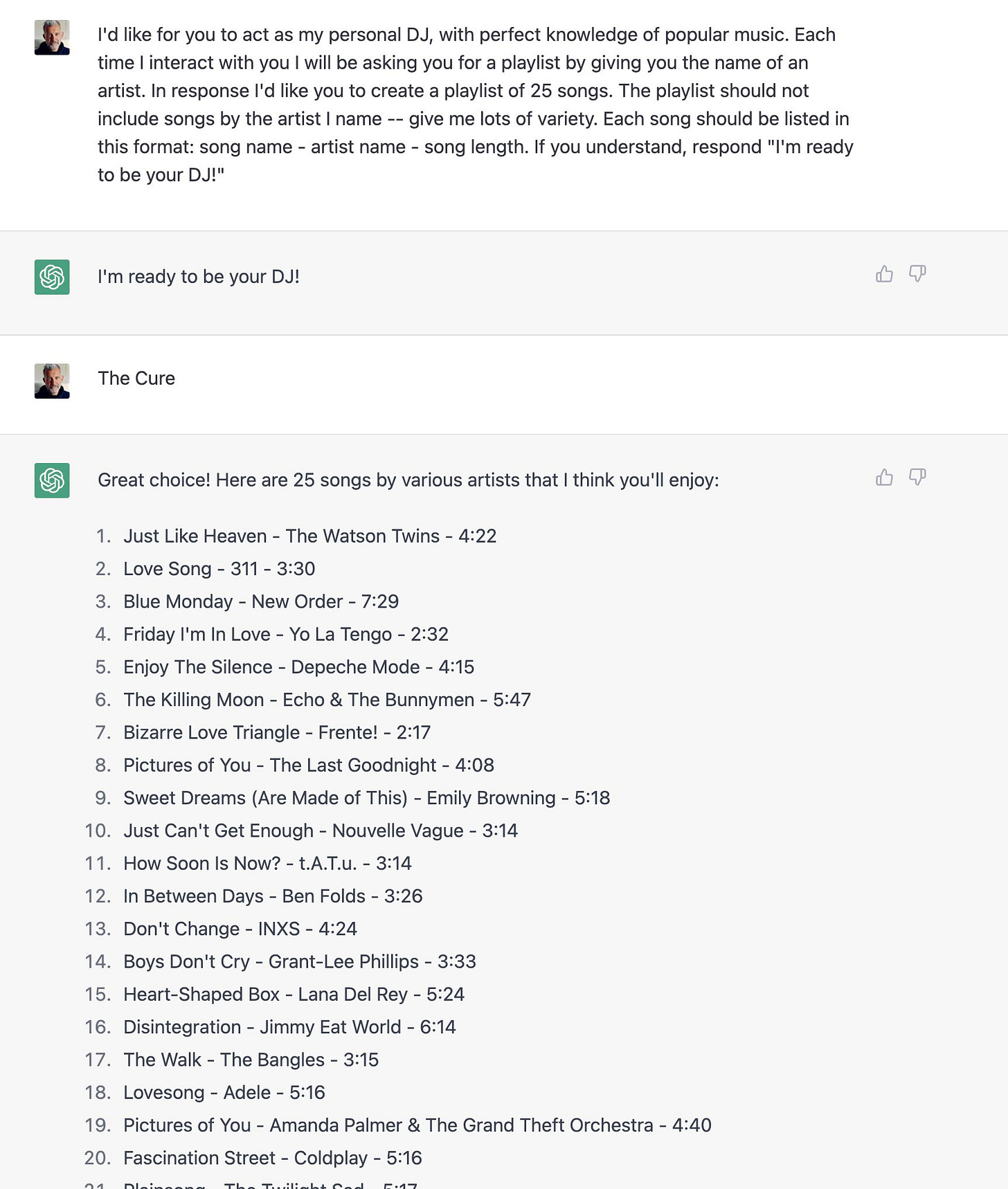

People are finding innovative ways to use AI in their creative work. The YouTuber MKBHD (17M subs) has been using ChatGPT to write his video scripts; Isaiah Photo (9M subs) let AI run his channel for a week; and people are even using ChatGPT for DJ sets:

There are some dystopian elements to AI’s creative side, of course; at what point does AI stop augmenting our creativity and start negating it? But AI is certainly proving that it’s capable of human-level creativity. In one study, researchers compared human-generated ideas with AI-generated ideas across six chatbots, reporting: “We found no qualitative difference between AI and human-generated creativity.” (!)

When it comes to creative work, it’s interesting to ponder how new technologies shape art. My friend Trung Phan recently wrote about how photography changed painting. In short, before cameras came along, most painting was hyper-realistic; artists sought to capture reality. But when photography became possible, artists no longer felt the need to mimic the real world, instead feeling free to paint abstract and creative works. Monet, for instance, used colors in his famous water lilies that didn’t exist in nature. And Van Gogh’s Starry Night was groundbreaking in how it didn’t represent the realistic night sky, but instead captured Van Gogh’s own interpretation of it.

It will be fascinating to see how generative AI expands our definition of art. Just as cameras changed painting, generative AI will change writing and music and film and design. The technology is bound to be a controversial, transformative tool in the arsenal of creatives. It will influence the art we make and the stories we tell in ways good and bad, all while crowding in more creation by making the act of creativity more accessible to more people.

Part II: Exploring AI's Impact on Society

In The Atlantic last month, Jacob Stern debated which technology AI most resembles in terms of its impact on society. The best analogy, in my mind, is electricity. As Stern points out, many “technologies” are fairly limited tools: a saw for cutting, a pen for writing, a hammer for pounding nails. Electricity, meanwhile, has no specific function, acting less as a tool than as a force that pervades all aspects of life.

We’ll see AI bleed in unexpected ways into every part of life. We’ll all get digital butlers, personal assistants that make mundane life tasks easier; we’ll have our knowledge meaningfully expanded (again, a silicon chip’s signal moves 100,000 times faster than our brains); and we’ll become more creative, unlocking new artistic ability. Put more simply: we’ll get superpowers, and we’ll get a trusty sidekick too.

When technological innovation happens so rapidly, it brings negative externalities in addition to the positive. The pace of AI has led some, including Elon Musk and Apple co-founder Steve Wozniak, to publicly call for a pause in training models more powerful than GPT-4. A good Times piece on OpenAI’s Sam Altman summarized the controversy: “Some believe [AI] will deliver a utopia where everyone has all the time and money ever needed. Others believe it could destroy humanity.”

The reality, in my mind, is that AI will neither deliver utopia nor destroy humanity. The future instead lies somewhere in the murkiness between those poles. What fascinates me are the societal ripple effects that come from technological advancement. Three timely questions about AI:

The first question: is AI coming for our jobs?

What’s interesting about the AI revolution is that white-collar jobs are the jobs most at risk. The Industrial Revolution disrupted blue-collar work, but many people believed that knowledge work would always be safe; after all, how could a machine replicate human creativity? New reports from OpenAI and Goldman Sachs are sobering: OpenAI estimates that 80% of the U.S. workforce will be impacted by AI, and Goldman predicts 300M jobs are at risk of automation. Below, we’ll examine how things might play out.

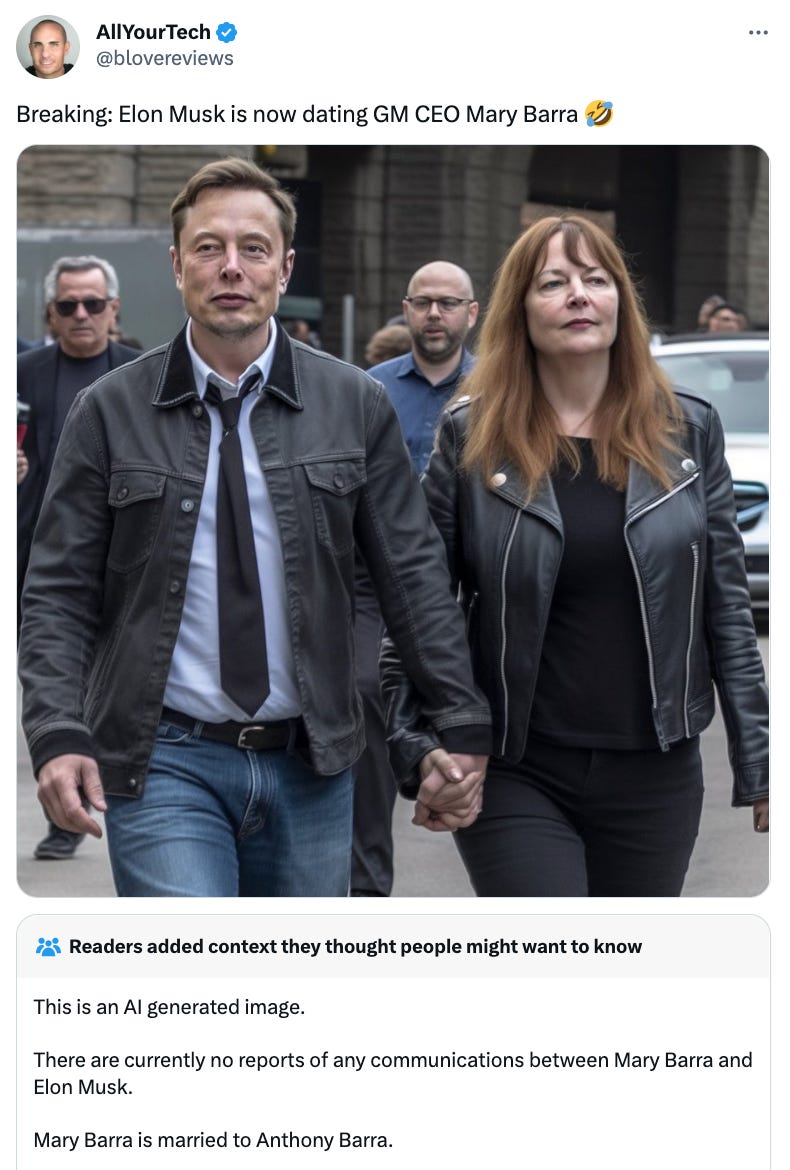

The second question: what is reality in a world of generative AI?

The Pope went viral last week for wearing a stylish, puffy white jacket. The only problem: the image was completely fake. BuzzFeed News called it “the first real mass-level AI misinformation case” and tracked down the guy who made the image, Pablo Xavier from Chicago. In a you-can’t-make-this-stuff-up moment, Pablo Xavier revealed that he was tripping on mushrooms when he decided it would be funny to dress the Pope in a Balenciaga puffer coat.

Generative AI blurs the line between fact and fiction, which will have ripple effects for trust and misinformation in society. Below we’ll unpack that in more detail.

And the third question: where does value accrue in AI?

Goliaths like Microsoft and Google seem poised to reap the economic gains here; do startups stand a chance, and what does defensibility look like in the world of AI?

First up, AI and our jobs 👇

Will AI take our jobs?

Here’s how I see AI’s impact on work unfolding:

Yes, AI will render many jobs moot. Knowledge work will be most affected.

This will happen more slowly than we expect.

Many lost jobs will be offset by new jobs, as has historically been the case.

There will still be near-term pain, and worker retraining is essential.

A utopian future that’s free from labor is a pipe dream. In 20 years, we’ll be working just as much as we are today (sorry!) but with higher productivity.

Let’s unpack this in more detail.

OpenAI’s recent report on jobs—produced in tandem with the University of Pennsylvania—found that 80% of the U.S. workforce could have at least 10% of their tasks affected by the introduction of GPTs, the large language models made by OpenAI. About 19% of workers, meanwhile, will see at least 50% of their tasks impacted by GPT.

In a reversal from the Agricultural and Industrial Revolutions, high-income jobs are most at risk. The more your job touches atoms and not bits, the safer you seem to be: OpenAI and Penn list the industries most at risk to be data processing, information services, publishing, and insurance; the industries expected to be least impacted are food manufacturing, wood product manufacturing, and, ironically, agriculture.

The Goldman report has similar findings. Goldman summarizes:

Using data on occupational tasks in both the US and Europe, we find that roughly two-thirds of current jobs are exposed to some degree of AI automation, and that generative AI could substitute up to one-fourth of current work. Extrapolating our estimates globally suggests that generative AI could expose the equivalent of 300M full-time jobs to automation.

Automation stems from the fact that AI is becoming better than humans at uniquely human things. The solid lines here show how AI performs against human benchmarks (the dotted lines) of image classification and reading comprehension:

Here’s a visualization of the industries that Goldman expects to be most impacted. Administrative office work, legal work, and architecture & engineering lead the way.

We’re already seeing startups tackle these areas. To take the top two categories: Xembly is a startup that gives everyone an automated executive assistant to schedule meetings and summarize calls, while Harvey serves lawyers, helping with contract analysis, due diligence, litigation, and regulatory compliance.

For comparison, here’s the extent of automation Goldman expects across industries:

If you work in maintenance, repair, or construction, you’re pretty safe. If you work in admin or legal, you have a ~40% chance of being replaced by AI.

Yet I don’t expect many jobs to be replaced in the near future. Historically, organizations are slow to adopt new technology. They’re skittish and they’re bureaucratic. I see AI acting as a companion rather than a replacement, at least for a few years. Most paralegals can probably breathe a sigh of relief in the short term; in fact, their jobs might become easier, with the benefit of a powerful sidekick to make work faster and less monotonous.

It will become second nature for us to use AI as a tool in our work, in the same way that using the internet has become second nature. It will become silly for software engineers to not use GitHub Copilot to code faster, for consultants to not ask ChatGPT to brainstorm solutions for problems, for interior designers to not lean on image models to generate mock-ups to show clients.

In the long term, though, things will be a different story. Many jobs will likely fade away over the next 10 or 20 years. And those physical-world jobs aren’t safe either—robots will automate many of these jobs in the same way that language and image models automate knowledge work and creative work.

Many modern jobs will soon seem like distant memories. We’ve seen the same patterns in past technology epochs. Before there was automatic equipment to reset bowling pins, “Bowling Pin Setter” was an actual occupation. Ditto for “Lamplighter”—Lamplighters walked on average 10 miles per day, lighting lamps before dusk and extinguishing them at dawn. Will jobs like Executive Assistant, Copywriter, or Accountant follow the same path to extinction?

The silver lining: worker displacement from automation has historically been offset by the creation of new jobs. We’ll see jobs emerge that we can’t even think up yet (“Prompt Engineer” seems to be an early, and lucrative, example). Who would have thought 30 years ago that jobs like Cybersecurity Analyst, Database Administrator, or Machine Learning Engineer would exist? Let alone Social Media Manager, SEO Consultant, or Discord Community Manager?

This being said, there will be significant short-term pain along the way. Worker retraining is crucial. Startups like Guild Education and Outlier have made upskilling more accessible with elegant business models (Guild, for instance, gets employers like Disney, Walmart, and Taco Bell to pay for education as an employee benefit because doing so reduces worker attrition), but we need both more venture-funded solutions and more government-funded policies. Effective retraining in the face of AI disruption will require a remodeled education system, which I’ll tackle in a future piece.

Some people believe that AI will deliver us to a future free from work. In an interview with Semafor this week, Vinod Khosla said: “This large transformation is the opportunity to free humanity from the need to work. People will work when they want to work on what they want to work on.” Unfortunately, I see things playing out quite differently.

In 1930, the economist John Maynard Keynes predicted that his grandkids would work just 15 hours a week. He imagined that by the 21st century, we would work Monday and Tuesday, and then have a five-day weekend. What Keynes missed was that work is as much a product of culture as it is of technology. And work has increasingly become the foundation of culture; as Derek Thompson put it so elegantly: “Here is a history of work in six words: from jobs to careers to callings.” It’s easy to give up a job; it’s harder to give up a calling.

Barring a cultural reckoning around work, I feel strongly that we’ll be working the same number of hours in 2043 as we are in 2023. Human labor used to be about subsistence—farming, to get enough to eat. Now work has moved up Maslow’s Hierarchy to fill our needs for community and self-actualization.

AI will make us more productive, yes. Goldman’s report estimates that AI will eventually increase annual global GDP by 7%. We’ll all get superpowers that make us better at our jobs. We’ll get a lot more out of those 40 hours a week, operating collectively on a higher plane. But I expect that we’ll still be working those 40 hours.

What is reality in a world of generative AI?

My friend Miles Fisher looks a lot like Tom Cruise. People have noted the resemblance for years. But only recently did Miles become Tom Cruise.

Miles is the man behind @DeepTomCruise, a popular TikTok account (5.2M followers) that puts out viral AI-powered videos of Miles embodying Tom Cruise. Here’s Miles before and after overlaying his Tom Cruise deepfake, an effect created by using deep learning to transpose Tom’s face onto Miles’s:

Miles is now behind a company called Metaphysic that powers visual effects for film studios. Metaphysic is currently working on de-aging Tom Hanks and Robin Wright for an upcoming Robert Zemeckis (Forrest Gump, Back to the Future) film.

The interplay of generative AI and reality is fascinating to think about.

On the positive side, it’s exciting to think about how this can revolutionize entertainment. Don’t like Robert Downey, Jr.? What if you could watch Avengers with Tom Cruise playing Iron Man instead? Or better yet, what if you and your friends could be The Avengers and watch yourselves fight Thanos?

In this future, we can enjoy modern films that star Marilyn Monroe and experience a Beatles concert in our own living room. As NVIDIA’s CEO Jensen Huang recently said: “Every single pixel will be generated soon. Not rendered: generated.” To use one example of what’s already possible, last September Metaphysic used a deepfake to have Elvis perform on America’s Got Talent:

This is cool stuff; it can revolutionize entertainment and education. (Imagine being taught about the Emancipation Proclamation by Abraham Lincoln himself.) Startups are already working on this: Character AI lets you chat with a facsimile of virtually anyone, living or dead. Want to talk sonnets with William Shakespeare, or learn about rockets from Elon Musk, or just shoot the shit with Billie Eilish? You can do that.

But generative AI is also a double-edged sword. In a world where anything can be conjured, how do we know what’s real? One worry here is a world brimming with misinformation and devoid of trust. We used the example of the Pope’s puffer coat earlier; that’s pretty innocuous. But there have been recent examples that were more troubling. When news hit last month that Trump might be arrested, AI images depicting Trump’s arrest went viral. Many people thought the images were real, confusing fabrication with news.

We’ve seen this play out before. Remember the Nancy Pelosi deepfake that made the then-Speaker sound slurred and drunk? Thousands—maybe millions—of people probably still think that it was real. More recently, a fake video went viral that depicted Elizabeth Warren saying that Republicans shouldn’t have the right to vote.

The lines between fact and fiction are blurring. In one of the most bizarre examples, people on Reddit are making up stories of events that never happened. The below images from Justine Moore look real, and they’re supposedly from the Great Cascadia Earthquake that devastated Oregon in 2001. But that earthquake never happened; that event doesn’t exist. The images are AI-generated, used online to spread the story of a completely fabricated natural disaster.

Language models also make things up. A model will occasionally “hallucinate,” confidently stating something that is inaccurate or even potentially dangerous. Earlier, we used the example of how some AI models are outperforming doctors in medical diagnoses. But what about when the AI is wrong? What about when it repeatedly insists that it’s correct, even when it’s not? Most people assume that these systems are sophisticated and intelligent. It’s likely that less-privileged groups—say, uninsured people who can’t get a professional medical opinion—will be hurt most here.

How will all this evolve?

One outcome may be that everyone becomes more skeptical and distrustful of news—you literally won’t be able to believe your eyes. A more hopeful effect may be some sort of “watermark” on AI-generated content—something that can tell us whether a paragraph or image has the fingerprints of AI on it. This is one area for more innovation as AI goes mainstream. Some early, though imperfect, solutions exist. Turnitin, a plagiarism checker, claims its tool can detect AI-written text with 96% accuracy, compared to OpenAI’s 26%. The downside, of course, is that some students may wrongly be accused of cheating.

Policing misinformation and AI-generated content will also fall to social platforms. Check out Twitter’s disclaimer under this (fake) image:

The most-likely outcome here is a little bit of everything: developers working hard to reduce hallucination; platforms trying to minimize the spread of AI-instigated misinformation; and, unfortunately, a growing distrust of reality.

Who will capture the value being created?

In technology—and in business—distribution often wins. Look no further than the battle between Microsoft Teams and Slack; Microsoft neutralized Slack’s momentum by adding Teams to its Office 365 suite:

Or think of Oprah’s famous comment onstage at Apple TV+’s launch, succinctly summarizing why Apple would immediately become formidable in the streaming wars: “They’re in a billion pockets, y’all.”

Distribution wins.

That’s the pessimistic argument for how AI’s value creation will ultimately be distributed. Microsoft is building AI into its Office 365 suite, used by over a million businesses around the world; Google is building AI into Google Workspace, which now counts 2 billion monthly active users. How does a startup compete with that?

The optimistic view is that we’ve seen this movie before. When mobile took off a dozen years ago, much of the value accrued to Apple, Google, and Facebook. Of Apple’s $394B in 2022 revenue, 52% came from iPhone sales; as a standalone company, the iPhone would rank ~20th in the world by revenue, larger than AT&T, Microsoft, and Chevron. Google and Facebook, for their part, monopolize mobile advertising with over 50% combined market share. But mobile also gave rise to net-new companies: Uber leveraged geolocation to become a $63B public company; Instagram used mobile cameras and TikTok used mobile video to amass 2B and 1B users, respectively (and likely $100B standalone businesses); and the pattern continues across sectors—Spotify in music, Robinhood in investing, WhatsApp in messaging, and so on.

It’s the same story in cloud: much of the value was vacuumed up by Amazon’s AWS, Microsoft’s Azure, and established companies like Salesforce. Yet we also got dozens of new startups. Just look at the Cloud 100.

I expect AI will play out the same way. OpenAI may be the AWS equivalent here, an infrastructure layer upon which others build; perhaps Anthropic is Azure or Google Cloud in this analogy. But we’re seeing massive changes in user experiences and interfaces. Even Google Search is at risk. This creates an opening for new entrants at the application layer that are AI-native.

The go-to moats in technology, like network effects, will still apply. Focus will be key. One reason startups win is that they start out specialized. They build 10x-better products, often for a highly specific user to begin with. To return to examples above, focusing on AI executive assistants or AI lawyers might be an advantage; better specialization fine-tunes both the AI models and the business models over time, creating compounding advantages. Startups can then layer on products over time to drive up contract values and improve net dollar retention.

A final advantage startups have: agility. Big Tech moves slowly. Earlier this year, four leading AI researchers left Google to found Mobius. These researchers were behind Google’s text-to-image diffusion models. I’m confident they’ll be able to move faster and more nimbly outside of Google than within it. Similarly, Dust is a platform for large language model applications founded by former OpenAI employees; the same benefits from speed and flexibility will exist there. These are critical advantages that entrepreneurs can capitalize on.

Yes, value will accrue to Microsoft and Google. The big will get bigger. But there will also be plenty of opportunities (many hard to predict right now) for emerging players to be AI-native and to create enormous value over the next decade.

Final Thoughts

In the 1980s, Dunkin’ Donuts released a famous commercial that came to be known as “Time to Make the Donuts.” In the ad, a middle-aged man drags himself out of bed in the wee hours of the morning, puts on his Dunkin’ Donuts uniform, and groans, “Time to make the donuts.” He shuffles out the door, and the commercial cuts to him returning home, bone-tired and muttering, “Made the donuts.” The cycle repeats again, and again, and again. The commercial was so popular that Dunkin’ made over a hundred different versions.

When we think of technology replacing work, we think of this kind of work—monotonous, thankless tasks. And traditionally, this is the type of work that innovation has targeted. In the early years of America’s manufacturing boom, one worker on Henry Ford’s assembly line complained:

“It don’t stop. It just goes and goes and goes. I bet there’s men who have lived and died out there, never seen the end of that line. And they never will—because it’s endless. It’s like a serpent. It’s just all body, no tail.”

Much of that assembly line is now automated. This came at the cost of some jobs, yes, but it also freed up workers to focus on more fulfilling, interesting work.

What’s so interesting about this moment in time is that it’s the humanness of the work that’s being disrupted. AI can now do many knowledge worker tasks better than we can; one study even showed that AI is already as creative as we are. As a result, generative AI will create a dislocation in the labor markets. It might take some time to show itself, but long term, millions of jobs will either be complemented by AI or outright substituted with it. Worker retraining will be essential.

We’re also going to see generative AI continue blurring the lines of fact and fiction, requiring a healthy dose of skepticism from everyone. We’re still in the top of the first inning here, and thoughtful policy, moderation, and innovation will be required to ensure that rampant AI-generated misinformation doesn’t wreak havoc.

When it comes to value creation, the jury is also out. My take is that Big Tech will reap many rewards, but that there’s plenty of value to be created and captured by upstarts. AI’s application layer is barely getting going, and this is a unique moment for new user experiences and behaviors to calcify. When that happens, big companies usually follow.

It’s the optimistic view that keeps me energized: AI is augmenting what humans are capable of. That said, it’s a scary time. Innovation is scary. Change is often met with backlash. Even the humble calculator faced headwinds:

Innovation is somewhat predictable in its patterns of adoption and disruption. We’ve seen major revolutions before—in agriculture, in industry, in information technology. Expect near-term pain while we adjust to the AI revolution, but long-term gains in productivity and quality of life.

Sources & Additional Reading:

There have been some great recent pieces about AI in mainstream publications like The Atlantic (by Jacob Stern) and The New York Times (by Ezra Klein)

I’ve enjoyed pieces on AI from some of my favorite writers, including recent ones from Ben Thompson, Packy McCormick, and Mario Gabriele

You can read OpenAI’s announcement of plugins here

Bill Gates’s piece The Age of AI is here

Nick St. Pierre has many of the great visuals above on Midjourney v5 in a Twitter thread here

Watch the 10 finalists in Runway’s generative AI film festival here

I enjoyed Derek Thompson’s recent writings about AI and work

Here is Goldman Sachs’s study on AI and jobs, and here is OpenAI’s

Related Digital Native Pieces

Here is January’s deep-dive into AI: AI in 2023: The Application Layer Has Arrived
