The Future of Technology Looks a Lot Like...Pixar?

Pixar's 3D Technology Will Power Vision Pro's Application Layer

For 2001’s Monsters, Inc., Pixar faced the challenge of animating fur. The character Sulley, for instance, has roughly 2.3 million hairs on his body. Animating each individual strand by hand would be impossible, so Pixar instead created a program called Fizt that could simulate the movement of Sulley’s hairs.

Fizt was later used to simulate the realistic movement of fur on characters like Remy the rat (2007’s Ratatouille) and Dug the dog (2009’s Up).

Each Pixar film has brought new challenges and new innovations in computer animation.

There was a good reason that Pixar’s first film centered around a group of toys: in 1995, humans were too difficult to animate. Human characters in Toy Story, like Andy and his mom, appear sparingly and are often shown by just their hands or feet. It would be another nine years before Pixar released a film featuring humans as main characters—2004’s The Incredibles, the studio’s sixth feature.

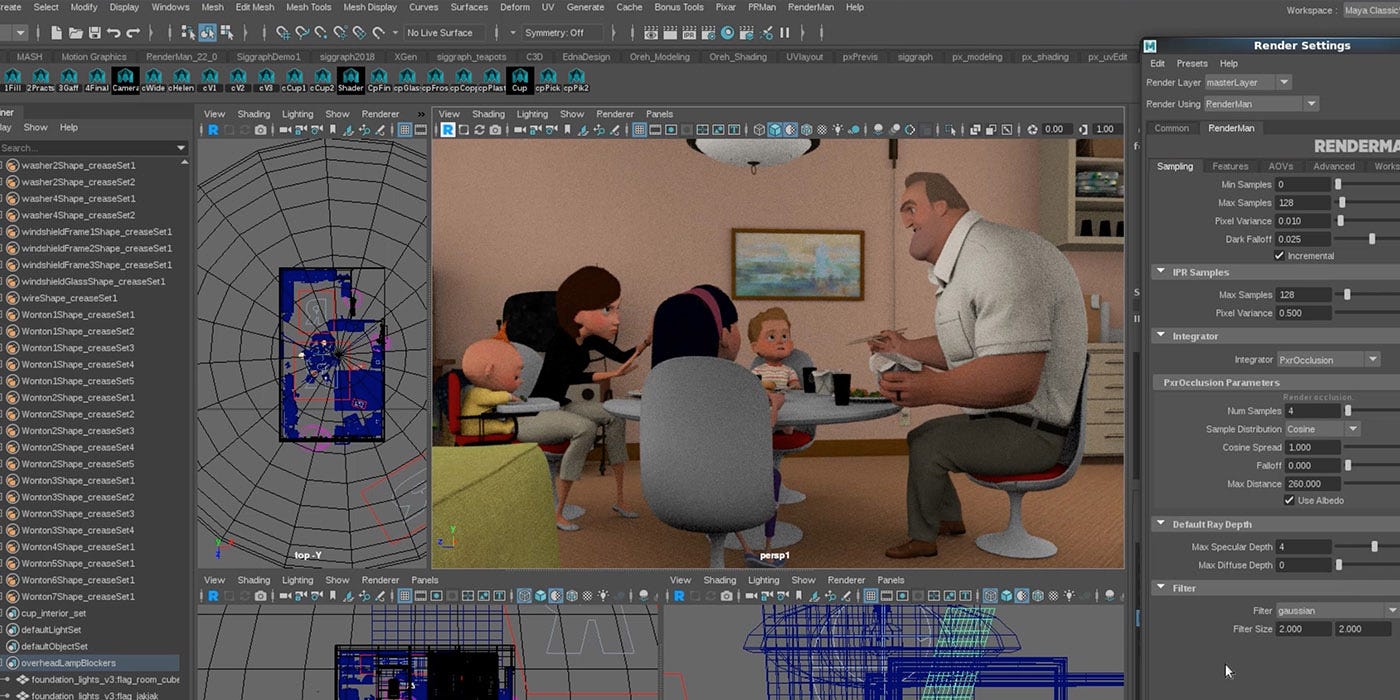

For The Incredibles, Pixar designed a type of muscle rig in a program called Goo, allowing a character’s skin to respond to moving muscles. A long-term challenge for Pixar had been animating shoulders, which have complex movements—as workarounds, Buzz Lightyear was given shoulders that consist of a ball and socket, while Woody was given a stitch where his arm meets his shoulder, allowing for easier animation. With Goo, though, animators could finally animate accurate shoulder movements by simulating how the body’s muscles work in concert.

(The Incredibles didn’t master everything about human animation. The superhero outfits were animated like skin, glued to the characters’ bodies; insert Edna voice: “So you want a suiiiitttt?” Only in Incredibles 2 were the super-suits animated like clothing, with more fluid and natural movements.)

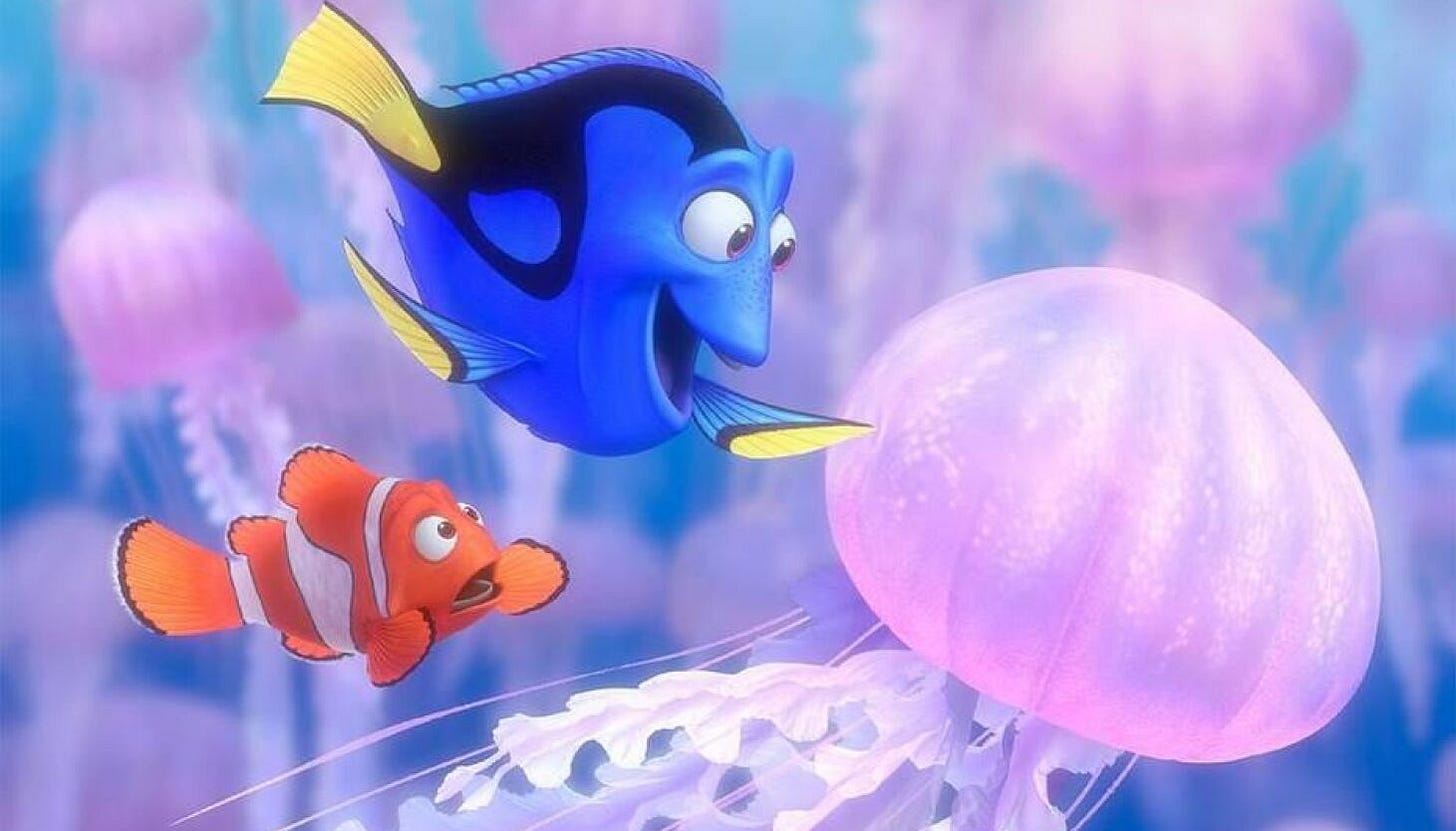

Other Pixar films brought their own unique challenges. Finding Nemo brought the challenge of animating water; a particularly difficult sequence was animating the scene with jellyfish, as animators wanted light to pass through the jellyfish membranes just as light passes through bathroom glass, “distorted and blurry.” Inside Out brought the challenge of animating the main character Joy, who is composed of glowing particles that radiate off her skin.

Cars brought the challenge of animating reflective surfaces on each vehicle, a tricky effect to master. That same concept was later applied to the shiny surfaces of toys in Toy Story sequels, with dramatically better animation than the original film.

As a result of Pixar’s need to constantly push the boundaries of animation, technology breakthroughs began to stream out of the Emeryville-based studio.

Pixar’s most famous technology, RenderMan, became Hollywood’s industry standard for rendering and the first software product to win an Oscar. For decades, RenderMan has been a go-to tool across Hollywood—the liquid-metal T-1000 in Terminator 2, the dinosaurs in Jurassic Park, and Gollum in Lord of the Rings all owe their lives to RenderMan. (RenderMan is available as a commercial product licensed to third parties.)

As the world moves to more immersive, three-dimensional content, it makes sense that the road forward will be paved with bricks laid down by Pixar’s three decades of cutting-edge computer animation.

And that’s exactly what’s happening.

The Alliance for OpenUSD

The most important news in tech last week—in my mind—was the creation of the Alliance for OpenUSD. Apple, in preparation for its Vision Pro launch next year, announced that it’s partnering with Pixar, Nvidia, Adobe, and Autodesk to create a new alliance that will “drive the standardization, development, evolution, and growth” of Pixar’s Universal Scene Description (USD) technology.

USD is a technology developed by Pixar that’s become essential in building 3D content. At its core, USD is an open-source framework that lets developers move their work across various 3D creation tools, unlocking use cases ranging from animation (where it began) to visual effects to gaming. Creating 3D content involves a lot of intricacies: modeling, shading, lighting, rendering. It also involves a lot of data, and that data is difficult to port across applications. The newly formed alliance aims to solve this problem by standardizing USD as a shared language for packaging, assembling, and editing 3D data.

In Nvidia’s words: “USD should serve as the HTML of the metaverse: the declarative specification of the contents of a website.”
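To make the “shared language” idea a bit more concrete, here is a minimal sketch of what a declarative scene description can look like. It uses plain Python to emit a tiny layer in USD’s human-readable text form (.usda). The prim names “World” and “Ball” and the helper function sphere_scene are my own hypothetical choices for illustration, and real pipelines would typically go through Pixar’s USD libraries rather than string-building.

```python
# A minimal sketch, not Pixar's tooling: build a tiny USD text layer by hand.
# "World", "Ball", and sphere_scene are hypothetical names for illustration.

def sphere_scene(radius: float) -> str:
    """Return a small .usda layer: one sphere nested under a transform."""
    lines = [
        "#usda 1.0",                            # header identifying a USD text layer
        "",
        'def Xform "World"',                    # a transform prim acting as the root
        "{",
        '    def Sphere "Ball"',                # a sphere prim nested inside it
        "    {",
        f"        double radius = {radius}",    # one attribute on the sphere
        "    }",
        "}",
        "",
    ]
    return "\n".join(lines)


# The scene is just data: it says what exists, not how to draw it.
scene_text = sphere_scene(2.0)
```

Saving scene_text to a file ending in .usda should give a layer that USD-aware tools can open, which is the HTML-like quality Nvidia is pointing at: the file describes the contents of a scene and leaves the rendering to whichever application reads it.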

This alliance is a key step to realizing Apple’s vision of a robust application ecosystem built on Vision Pro.

Apple Vision Pro’s Application Layer

Technology follows a reliable march toward more immersive content.

I’ve written about this before, most recently in May’s 3D Content and the Floodgates of Production. From that piece:

We saw this march offline, with books giving way to radio, which in turn gave way to film and television. And we’ve seen the pattern repeat online: Twitter was text-based; Instagram popularized photo-sharing; TikTok is built around video. Now, every platform is scrambling to be video-first. Each major platform gets progressively more immersive.

An early example of 3D content is Roblox. While TikTok is running circles around YouTube in daily engagement (113 minutes per day vs. 77 minutes per day, for daily actives), Roblox trumps them both at a staggering 190 minutes per day. That figure is up 90% since 2020. Young people (50% of Roblox users are 12 or under) are becoming accustomed to building and interacting with 3D content.

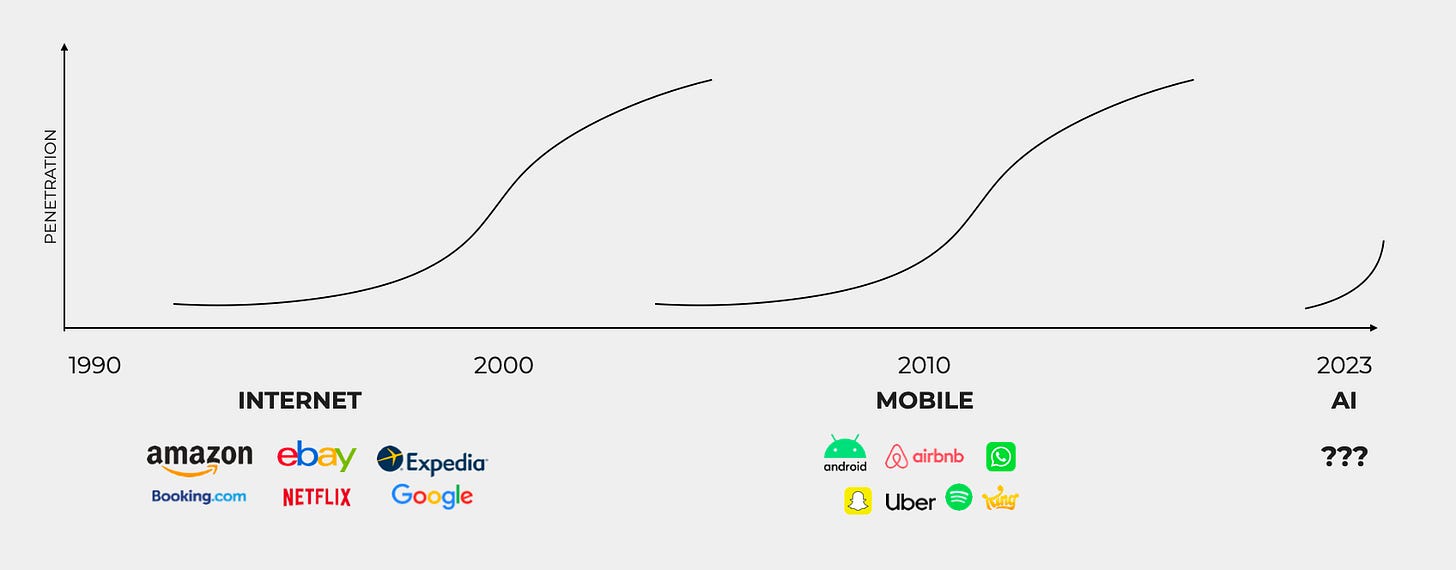

But Roblox is just the tip of the spear. We’ll see more applications built for 3D content, particularly once Vision Pro launches and comes down in price. Past technology epochs like the internet and mobile catalyzed the creation of valuable businesses, and these early years of mixed reality will be no different.

This is clearly a major focus of Apple’s. The company’s VP of the vision products group, Mike Rockwell, said in the OpenUSD press release: “OpenUSD will help accelerate the next generation of AR experiences, from artistic creation to content delivery, and produce an ever-widening array of spatial computing applications.” This is one reason Apple announced the Vision Pro nearly a year before release; it needs to give third-party developers the time to come up with a killer app. (Beat Saber on Oculus is nice, but it’s not going to be the kind of app that powers a device to 1B+ users rather than stagnating in the 10-100M range. This is a key question: is Vision Pro a console-like product, or an iPhone-like product in the scale of its mainstream adoption?)

The emergence of the VR/AR application layer is exciting stuff. We’re all going to become consumers of richer experiences online, and the door to becoming a 3D developer will open wider.

I’ve been spending a lot of time on r/Blender, the subreddit community for the Blender open-source 3D software. Blender, which was initially developed in 1994 (actually by another animation studio), leaves many developers wanting.

The same story holds for other 3D modeling software, like Maya. Whenever there’s this much negative sentiment about a product in a Reddit community, there’s usually a startup opportunity.

What are the next-generation products that need to be built? Perhaps a Unity or Unreal for non-gaming use cases. Perhaps more intuitive and accessible creative tools.

3D content creation needs to become more mainstream. If Snap’s augmented reality dancing hot dog was what we could create in 2017, what can we create in 2024? Hopefully creations much more powerful and robust.

Similar to Pixar, Weta Digital has been behind major innovations in movie-making technology. Peter Jackson’s New Zealand-based visual effects studio, whose tools and technology division was scooped up by Unity in 2021 for $1.6B, designed programs like MASSIVE to simulate large groups of people (MASSIVE stands for Multiple Agent Simulation System in Virtual Environment) and Lumberjack, a tree creation system used to digitally render vast forests. Other Weta products are similar to those developed at Pixar: Wig is simulation and modeling software for how fur moves, used in King Kong and the Planet of the Apes movies, while Tissue maps how computer-generated characters should move by imagining how their tissues and ligaments would behave.

What happens when these tools become accessible to your everyday user, not just to visual effects experts? When anyone can create large armies or hyper-realistic CGI characters using intuitive software? When generative AI can make development even easier?

Members of r/Blender won’t be complaining about steep learning curves—and subreddits like that won’t be limited to technical, diehard developers. More people will be able to create powerful 3D experiences that become the lifeblood of the next era of the internet.

Enter: Generative AI

Earlier this year, I created my own Pixar character by stitching together a few generative AI tools. Following my friend Justine’s instructions, I first uploaded a photo of myself to Midjourney, then added the prompt, “Male Pixar character, blond-brown hair and green eyes.” Here’s what Midjourney delivered:

A bit more Disney Animation than Pixar, but not bad. I then uploaded the image into HeyGen, which turned it into a video. As the last step, I uploaded 60 seconds of my recorded voice to ElevenLabs, wrote out a script, and used the AI to recreate my voice reading the script. At the end, I had a moving, talking Pixar character.

The entire process took less than five minutes, and was entirely free. Pretty cool.

Generative AI tools will collide with mixed reality tools to supercharge what everyday people can make. The chart of technology inflections above can be mirrored for AI’s budding application ecosystem.

This is an exciting moment, with multiple breakthrough technologies catching fire. The next few years will be interesting. Imagine writing the prompt, “Create a massive army of humans, elves and dwarves; set it in Mordor; and wage a battle against an army of orcs”—then experiencing the entire thing in virtual reality. This brings the high-end 3D content tools mentioned above (Weta’s MASSIVE product) into the mainstream, colliding with AI-powered tools. And all of it might happen through the forthcoming Vision Pro.

Final Thoughts: Remixed IP & the Fragmentation of Culture

Two interesting offshoots of the above: the remixing of IP and the fragmentation of culture.

How do mixed reality and AI change the IP landscape? As content proliferates, intellectual property becomes more valuable. Think of what’s happened over the past few years. As more and more content competes for our attention (both user-generated content and professionally-produced content—there were 599 English-language scripted TV shows in 2022), consumers gravitate to known properties.

Think Disney’s savvy acquisitions—Marvel, Star Wars, Pixar, The Simpsons. Or think Barbie and the 45 (!) other films Mattel has in the works based on toy brands, including Hot Wheels, Uno, Polly Pocket, and Rock ‘Em Sock ‘Em Robots.

What will be interesting to see is how IP owners license out content in a world of proliferating, AI-powered creation.

The music industry may show an indication of how things will unfold. Instead of suing, Google and Universal Music are in talks to license artists’ melodies and voices for AI-generated songs. The companies would look to develop a tool for fans to create tracks legitimately, and pay the copyright owner; artists would have the right to opt in.

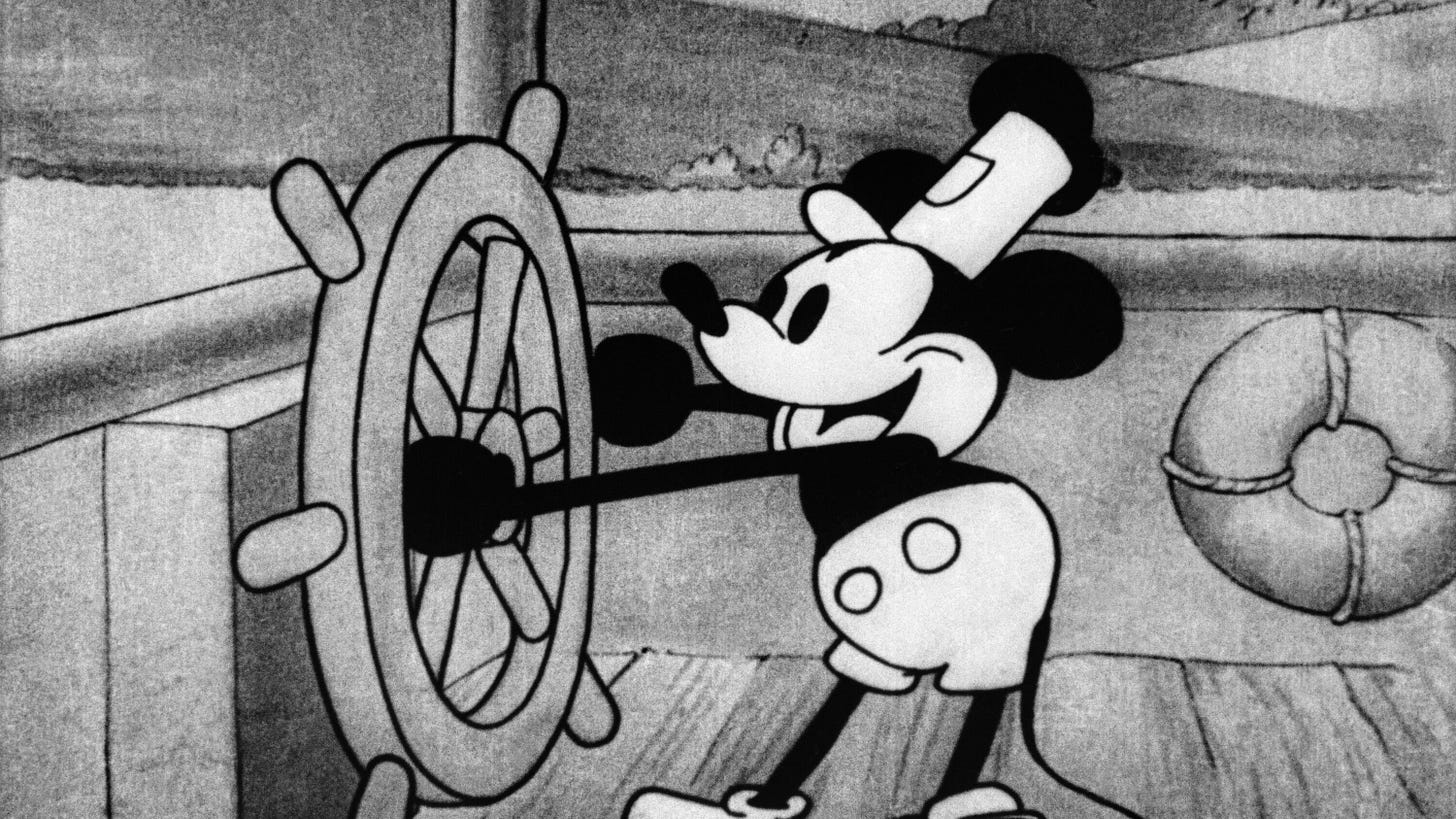

To return to Disney, the media giant has an important copyright expiring at the end of the year: Mickey Mouse. When Walt Disney created Mickey Mouse, copyright law allowed a maximum of 56 years of protection. This meant that Mickey would fall into the public domain in 1984, 56 years after the character’s creation in 1928. As the deadline approached, Disney lobbied and copyright law was extended to 75 years. As 2003 approached (the 75-year deadline), Disney again lobbied and got Mickey’s copyright extended to 95 years. Ninety-five years from 1928 runs through the end of 2023, so Mickey is now set to enter the public domain on January 1, 2024. (To be clear, the expiration applies only to the earliest version of Mickey, the gloveless Steamboat Willie version.)

Winnie the Pooh entered the public domain in 2022, leading to the horror film Winnie the Pooh: Blood and Honey.

What happens when anyone can create AI-generated, mixed reality stories using valuable IP? On the one hand, publicly available IP will become more valuable; on the other, privately owned IP will become a lucrative new licensing stream for companies. I’m sure IP owners and artists will balk; more than 4,000 writers, including Margaret Atwood and Jodi Picoult, have already signed a letter to the CEOs of OpenAI, Google, Microsoft, and Meta accusing the companies of exploiting them by developing chatbots that “mimic and regurgitate” their language and style. When all IP becomes remixable with AI, such petitions will become more common.

The second offshoot: the fragmentation of culture. The internet broke open distribution; generative AI is set to break open creation. Both grease the wheels of cultural production. In addition to Hollywood films and network TV shows, we now get 30,000 hours of video uploaded to YouTube each hour and 49,000 new songs uploaded to Spotify every day.

And both broader distribution and broader creation result in a fragmented cultural lingua franca. We’ve come a long way from three TV channels. Internet culture is now culture writ large, and internet culture is definitionally non-mainstream. Internet culture is messy and chaotic. Internet culture moves at a torrid clip, and thumbs its nose at content catered to the cultural common denominator.

Here’s a stunning stat: more than 39,000 accounts on TikTok now have at least 1M followers. This creates “niche fame” where within a specific following, a creator may be a rockstar, but more broadly, that same creator may be completely unknown. New content formats tend to usher in new celebrities. As more of us are able to make things with better creative tooling and new distribution platforms, culture will continue to fragment.

The next few years will bring big shifts in mixed reality and AI, and possibly a new product to underpin how we experience the internet. We’ll all be Pixar animators, developing stunning 3D creations, and we’ll all be Pixar consumers, taking in an ever-growing flood of high-quality, experiential content.

Sources & Additional Reading

Apple Announces the Alliance for OpenUSD | The Verge

How Every Pixar Movie Advanced Animation | Insider
